Background

Fluent Bit is an open source, multi-platform log processor that aims to be a generic Swiss Army knife for log processing and distribution.

It is included with several distributions of Kubernetes, and is used to pull log messages from multiple sources, modify them as needed, and send the records to one or more output destinations. It is amazingly customizable, so you can do just about any processing you want, with a couple of idiosyncrasies, one of which I'll describe here.

The Challenge

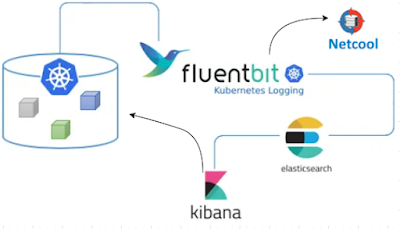

What if you have a log message that you want to handle in two different ways:

1. Normalize the fields in the log message for storage in ElasticSearch (or Splunk, etc.).

2. Modify the log message so it has all of the appropriate fields needed for processing by your Netcool environment (fields that you don't necessarily want in your log storage system).

The Solution

Based on all of the unique restrictions in Fluent Bit, what you need to do is create a new copy of the log message, preserving the original so that the original can go through your "standard" processing, and the new message can be processed according to your needs in Netcool.

The specifics of this solution are to use a rewrite_tag FILTER to create a new, distinct copy of the message with a custom tag within the Fluent Bit pipeline, and then configure the appropriate additional FILTERs and OUTPUTs that only Match this new, custom tag. You also need to modify any existing OUTPUTs to exclude this new tag.

Here's a high-level graphic showing what we're going to do:

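Our rewrite_tag FILTER is going to match every tag that does not begin with "INC". That covers the existing tags (the ones beginning with "kub") and excludes our new tag, which will be "INC". The exclusion matters because the re-tagged copy is emitted back into the pipeline, and we don't want the same filter to pick it up again. So after the rewrite_tag filter, there will be two messages in the pipeline: the original plus our new one with our custom "INC" tag. We can then specify the appropriate Match statements in later FILTERs and OUTPUTs to only match the appropriate tag. So in the ES output above, the Match_Regex statement is: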

Match_Regex ^(?!INC).*

The official name of the above is a "negative lookahead". Go ahead and try it out at regex101.com if you want. It will match any tag that does NOT begin with "INC", which is the custom tag for our new messages that we want to send to our HTTP Message Bus probe.
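For context, here is a rough sketch of how that Match_Regex line might sit inside the ElasticSearch OUTPUT stanza. The Host, Port, and Index values are placeholders I've assumed for illustration; substitute whatever your existing output already uses.

```text
[OUTPUT]
    # Existing log-storage output; only the Match_Regex line is the point here.
    Name        es
    # Skip any record whose tag begins with INC (our Netcool-bound copies).
    Match_Regex ^(?!INC).*
    # Assumed placeholder values, not from my environment:
    Host        elasticsearch.example.com
    Port        9200
    Index       fluent-bit
```

If your existing output currently uses a plain Match line, the Match_Regex line replaces it rather than sitting alongside it.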

The rewrite_tag FILTER will be custom for your environment, but the following may be close in many cases. For my case, I want to match any message that has a log field containing the string "Error writing to" (the match is case-sensitive). You'll have to analyze your current messages to find the appropriate field and string that you're interested in. But here's my rewrite_tag FILTER stanza:

    [FILTER]
        Name rewrite_tag
        Match_Regex ^(?!INC).*
        Rule $log ^.*Error\swriting\sto.* INC true

The "Rule" statement is the tricky part here. This statement consists of 5 parts, separated by whitespace:

Rule - the literal string "Rule"

$log - the name of the field you want to search to create a new message, preceded by "$". In this case, we want to search the field named log.

^.*Error\swriting\sto.* - the regular expression we want to match in the specified field. This regular expression CANNOT CONTAIN SPACES. That's why I'm using "\s".

INC - this is the name of the tag to set on the new message. This tag is ONLY used within the Fluent Bit pipeline, so it can literally be anything you want. I chose "INC" because these messages will be sent to the Message Bus probe to eventually create incidents in ServiceNow.

true - this specifies that we want to KEEP the original message. This allows it to continue to be processed as needed.
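One way to confirm the Rule is firing is to temporarily add a stdout OUTPUT that matches only the new tag. This is a debugging sketch on my part rather than part of the setup described here; remove it once you see the copied records printed in the Fluent Bit log.

```text
[OUTPUT]
    # Temporary: print only the re-tagged copies so you can inspect them.
    Name  stdout
    Match INC
```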

After you have the rewrite_tag FILTER in place, you will have at least one additional FILTER of type "modify" in your pipeline to allow you to add fields, rename fields, etc. You'll then have an OUTPUT stanza of type "http" to specify the location of the Message Bus probe. Something like the following:

    [OUTPUT]
        Name http
        port 80
        Match INC
        host probehost
        uri /probe/webhook/fluentbit
        format json
        json_date_format epoch

The above specifies that the URL that these messages will be sent to is

http://probehost:80/probe/webhook/fluentbit

In the JSON that's sent in the body of the POST request, there will be a field named date, and it will be in Unix "epoch" format, which is an integer representing the number of seconds since January 1, 1970 UTC (a "normal" Unix/Linux timestamp).
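The "modify" FILTER mentioned earlier sits between the rewrite_tag FILTER and this OUTPUT. A minimal sketch follows; the field names (severity, source, summary) are hypothetical, so replace them with whatever your probe's rules file expects.

```text
[FILTER]
    # Only touch the copies bound for the Message Bus probe.
    Name   modify
    Match  INC
    # Add a key only if it does not already exist (hypothetical field names).
    Add    severity critical
    Add    source fluent-bit
    # Rename the log field to a name the probe expects (hypothetical).
    Rename log summary
```

Because this FILTER matches only the INC tag, the original message still reaches your log storage untouched.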

That's it. That's all of the basic configuration needed on the Fluent Bit side.

Extra Credit/TLS Config

If your Message Bus probe is using TLS, you just need to add the following two lines to the above OUTPUT stanza:

        tls On
        tls.verify Off

The first line enables TLS encryption, and the second line is a shortcut that allows the connection to succeed without having to add the appropriate certificates to Fluent Bit - it will accept any certificate presented to it by the Message Bus probe, even a self-signed certificate.
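Put together, the OUTPUT stanza with TLS enabled might look like the sketch below. Port 443 is my assumption for a TLS listener; use whatever port your probe actually listens on. The commented-out lines show the stricter alternative of verifying the probe's certificate, and the CA file path there is a placeholder.

```text
[OUTPUT]
    Name             http
    Match            INC
    host             probehost
    # Assumed TLS port; use the port your probe listens on.
    port             443
    uri              /probe/webhook/fluentbit
    format           json
    json_date_format epoch
    tls              On
    tls.verify       Off
    # Stricter alternative (placeholder path): verify the probe's certificate.
    # tls.verify     On
    # tls.ca_file    /path/to/ca.crt
```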