Thursday, March 30, 2023

Sending Kibana (free/open source) Alerts via Webhook Using Fluent-Bit (free)

Wednesday, March 29, 2023

Modify kibana.yml after deploying Kibana with Helm

If you open a shell in the Kibana pod with:

kubectl exec --stdin --tty kibana_podname -- /bin/bash

you'll find that there's no editor available (like vi or even ed). You can cat config/kibana.yml, but the comments state that it is auto-generated. So what are you supposed to do to add a setting to the file? For example, you might need to add a value for xpack.encryptedSavedObjects.encryptionKey so you can configure alerting.

The solution I came up with is a multi-step process:

1. Get the default values.yaml file for the chart and store that in a file with the command:

helm show values elastic/kibana > /tmp/kibana.yaml

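2. Edit that file to add an entry for kibana.yml under kibanaConfig. Originally, kibanaConfig is empty (set to {}). You need to change it so that it contains a kibana.yml key whose value is the block of settings you want added to the file, such as xpack.encryptedSavedObjects.encryptionKey.

3. Uninstall the existing release:

helm uninstall kibana

4. Then install the helm chart again with: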

helm install kibana elastic/kibana -f /tmp/kibana.yaml

And that's it. Your changes will be applied and you're good to go.
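For step 2, here is a minimal Python sketch of what the edited section could contain. It is an illustration, not part of the chart's tooling: the function name build_kibana_override is hypothetical, the key is randomly generated, and the layout assumes the chart accepts a kibana.yml block under kibanaConfig in the form shown commented out in its default values.yaml. Kibana expects the encryption key to be at least 32 characters long.

```python
import secrets


def build_kibana_override(encryption_key: str) -> str:
    """Return a kibanaConfig section (YAML text) for the values file."""
    if len(encryption_key) < 32:
        raise ValueError("encryption key must be at least 32 characters")
    # The key is quoted so an all-digit value is still read as a string.
    return (
        "kibanaConfig:\n"
        "  kibana.yml: |\n"
        f'    xpack.encryptedSavedObjects.encryptionKey: "{encryption_key}"\n'
    )


if __name__ == "__main__":
    # token_hex(16) yields 32 hexadecimal characters.
    print(build_kibana_override(secrets.token_hex(16)))
```

The printed block would replace the empty kibanaConfig: {} line in /tmp/kibana.yaml before running the install command.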

I'm pretty sure there's a way to create a configMap and reference it, which would then allow you to just delete the pod to have it re-read the configMap, but I haven't figured out those exact details. Maybe in another post.

Wednesday, February 8, 2023

Configuring Fluent Bit to send messages to the Netcool Message Bus probe

Background

Fluent Bit is an open source and multi-platform log processor tool which aims to be a generic Swiss knife for logs processing and distribution.

It is included with several distributions of Kubernetes, and is used to pull log messages from multiple sources, modify them as needed, and send the records to one or more output destinations. It is amazingly customizable, so you can do just about any processing you want, with a couple of idiosyncrasies, one of which I'll describe here.

The Challenge

What if you have a log message that you want to handle in two different ways:

1. Normalize the fields in the log message for storage in Elasticsearch (or Splunk, etc.).

2. Modify the log message so it has all of the appropriate fields needed for processing by your Netcool environment (fields that you don't necessarily want in your log storage system).

The Solution

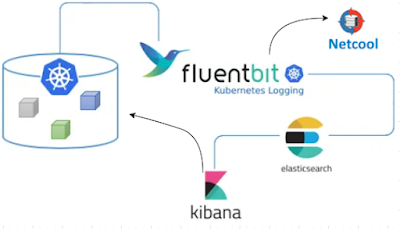

Here's a high-level graphic showing what we're going to do:

Our rewrite_tag FILTER is going to match all tags beginning with "kub". This will exclude our new tag, which will be "INC". So after the rewrite_tag filter, there will be two messages in the pipeline: the original plus our new one with our custom "INC" tag. We can then specify the appropriate Match statements in later FILTERs to only match the appropriate tag. So in the ES output above, the Match_Regex statement is:

Match_Regex ^(?!INC).*

The official name of the above is a "negative lookahead". Go ahead and try it out at regex101.com if you want. It will match any tag that does NOT begin with "INC", which is the custom tag for the new messages that we want to send to our HTTP Message Bus probe.
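To check the pattern's behavior locally, here is a small Python sketch. Python's re module and the regex engine inside Fluent Bit are different implementations, so treat this as an approximation of how the Match_Regex would behave. The sample tags are made up.

```python
import re

# Same pattern as the Match_Regex statement: any tag that does not start with "INC".
NOT_INC = re.compile(r"^(?!INC).*")

# Hypothetical tags, for illustration only.
tags = ["kube.var.log.containers.app.log", "INC", "INCIDENT", "host.INC"]

matched = [tag for tag in tags if NOT_INC.match(tag)]
print(matched)  # ['kube.var.log.containers.app.log', 'host.INC']
```

Note that the lookahead only inspects the start of the tag, so "INCIDENT" is excluded as well, while "host.INC" still matches.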

The rewrite_tag FILTER will be custom for your environment, but the following may be close in many cases. For my case, I want to match any message that has a log field containing the string "Error writing to" (the match is case-sensitive). You'll have to analyze your current messages to find the appropriate field and string that you're interested in. But here's my rewrite_tag FILTER stanza:

[FILTER]

Name rewrite_tag

Match_Regex ^(?!INC).*

Rule $log ^.*Error\swriting\sto.* INC true

The "Rule" statement is the tricky part here. This statement consists of 5 parts, separated by whitespace:

Rule - the literal string "Rule"

$log - the name of the field you want to search to create a new message, preceded by "$". In this case, we want to search the field named log.

^.*Error\swriting\sto.* - the regular expression we want to match in the specified field. This regular expression CANNOT CONTAIN SPACES. That's why I'm using "\s".

INC - this is the name of the tag to set on the new message. This tag is ONLY used within the Fluent Bit pipeline, so it can literally be anything you want. I chose "INC" because these messages will be sent to the Message Bus probe to eventually create incidents in ServiceNow.

true - this specifies that we want to KEEP the original message. This allows it to continue to be processed as needed.
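The following Python sketch imitates how the parts of the Rule fit together. It is a simplified model written for illustration, not Fluent Bit's actual implementation: the apply_rule function and the sample records are hypothetical. Splitting the rule on whitespace also shows why the regular expression cannot contain literal spaces.

```python
import re

# Everything after the literal "Rule" keyword in the stanza above.
RULE = r"$log ^.*Error\swriting\sto.* INC true"


def apply_rule(rule, tag, record):
    """Return the (tag, record) pairs left in the pipeline after the rule runs."""
    # A space inside the regex would break this whitespace split.
    key, pattern, new_tag, keep = rule.split()
    value = str(record.get(key.lstrip("$"), ""))
    if not re.search(pattern, value):
        return [(tag, record)]  # no match: the message passes through unchanged
    retagged = (new_tag, dict(record))
    # keep == "true" leaves the original message in the pipeline as well.
    return [(tag, record), retagged] if keep == "true" else [retagged]


hit = apply_rule(RULE, "kube.app", {"log": "Error writing to /data/out"})
miss = apply_rule(RULE, "kube.app", {"log": "all good"})
print([t for t, _ in hit])   # ['kube.app', 'INC']
print([t for t, _ in miss])  # ['kube.app']
```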

After you have the rewrite_tag FILTER in place, you will have at least one additional FILTER of type "modify" in your pipeline to allow you to add fields, rename fields, etc. You'll then have an OUTPUT stanza of type "http" to specify the location of the Message Bus probe. Something like the following:

[OUTPUT]

Name http

port 80

Match INC

host probehost

uri /probe/webhook/fluentbit

format json

json_date_format epoch

The above specifies that the URL that these messages will be sent to is

http://probehost:80/probe/webhook/fluentbit

In the JSON that's sent in the body of the POST request, there will be a field named date, and it will be in Unix "epoch" format, which is an integer representing the number of seconds since January 1, 1970 UTC (a "normal" Unix/Linux timestamp).
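As a rough illustration of what the receiving side sees, this Python sketch decodes a body of that shape and converts the epoch value to a readable timestamp. The sample body is made up, the parse_probe_body function is hypothetical, and the sketch assumes the records arrive as a JSON array, each with a date field.

```python
import json
from datetime import datetime, timezone


def parse_probe_body(body: str):
    """Decode a JSON array of records and convert each epoch date to UTC."""
    records = json.loads(body)
    return [
        (datetime.fromtimestamp(rec["date"], tz=timezone.utc), rec)
        for rec in records
    ]


# Made-up example body: one record with an integer epoch timestamp.
sample_body = '[{"date": 1675864800, "log": "Error writing to /data/out"}]'

for when, rec in parse_probe_body(sample_body):
    print(when.isoformat(), rec["log"])
```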

That's it. That's all of the basic configuration needed on the Fluent Bit side.

Extra Credit/TLS Config

Tuesday, February 5, 2019

A great video on deploying and operating Kubernetes at scale

https://www.youtube.com/watch?v=8edDcy3oeUo

Tuesday, December 4, 2018

If you run Kubernetes in the cloud, the first major vulnerability found isn't a huge issue

https://www.zdnet.com/article/kubernetes-first-major-security-hole-discovered/

It's a pretty big deal and quite scary, but patches were immediately available upon disclosure. What's even better is that the managed Kubernetes services running on AWS, Azure and Google Cloud Platform have all been patched already. If you're managing your own K8s clusters, however, you need to patch them yourself, which just takes time and know-how.

In my eyes, this is another data point that shows how proper use of cloud resources can be extremely beneficial to a company. Specifically, the big cloud players, especially AWS, are very similar to a highly competent and agile outsourced IT department. They have offerings that are years ahead of services that you would want to have onsite, and they've got testing methodologies in place to ensure that they're available 99.9% of the time.

It's true that there can be some issues in moving to the cloud, but many of the problems of the past now have very robust solutions that are included in the offerings. And those offerings are available on a pay-as-you-go basis in many cases. So you can easily keep tabs on exactly how much you're spending even on a per-application basis.

To ensure a successful digital transformation, contact us to get the experienced help that will put you on the right path.

Monday, November 26, 2018

Every enterprise is already using serverless applications in some form or another

You essentially have no insight into how the results are generated by the "cloud" you're accessing via IP address or hostname. So you're accessing a service, but the actual server part of that interaction is abstracted from you.

Here's a great article on the concept of "Servicefull Serverless" to go into more detail about this:

https://www.infoq.com/articles/serverless-sea-change

Now, the current definition of "serverless" leverages all kinds of possible technologies like AWS Lambda or Apache OpenWhisk or even Cloudflare Isolates, on top of containers and Kubernetes running in VMs (or bare metal in the case of Isolates). So it's extremely important for you to understand those components at some point, but from your view as a consumer, you're already using serverless technology.