Friday, November 17, 2017

An updated version of MxLoader is available

https://www.ibm.com/developerworks/community/groups/service/html/communityview?communityUuid=220c5757-ac28-4f25-bd08-457c5a3364c3#fullpageWidgetId=W9cbbfec147e6_4341_9578_7671e722619f&file=31d8347f-219f-45b2-a1d2-18f777e38810

Friday, November 3, 2017

Canada's Secret Spy Agency Has Open-Sourced a Malware-Fighting Tool

https://bitbucket.org/cse-assemblyline/

All of the details about the release can be found here:

http://www.cbc.ca/news/technology/cse-canada-cyber-spy-malware-assemblyline-open-source-1.4361728

Every IT department in the world should download and use this if they don't already have something in place.

Thursday, November 2, 2017

We're a sponsor at Pink18 in Orlando!

Tuesday, October 24, 2017

How Netcool Operations Insight delivers cognitive automation by Kristian Stewart

https://www.ibm.com/blogs/cloud-computing/2017/08/netcool-operations-insight-cognitive-automation/

One important topic that Kristian omitted from his excellent article is the optional Agile Service Manager (ASM) component of NOI. ASM provides a context-aware topology view of your applications and infrastructure, which gives you a clear view of the impact caused by events. Take a look at our other articles and YouTube videos for more information on ASM.

Friday, October 13, 2017

What to use instead of ITMSuper

https://www.ibm.com/developerworks/community/blogs/0587adbc-8477-431f-8c68-9226adea11ed/entry/Helping_us_help_you_ITM_Bitesize_Edition_ITMSuper?lang=en

Wednesday, October 11, 2017

Free mobile app for monitoring the status of your Maximo and ICD environments

https://www.ibm.com/us-en/marketplace/mxadmin

It was written by A3J Group and appears to have some pretty nice functionality.

Wednesday, September 20, 2017

IBM Control Desk 7.6.0.3 is available

Introduction

IBM has released the ICD 7.6.0.3 FixPack: https://www-945.ibm.com/

Installation issues

New/Updated Functionality

Service Portal

Update 9/25/17: Control Desk Platform

Monday, September 11, 2017

Force change of global system property in Maximo

UPDATE 6/3/2020

Introduction

Problem

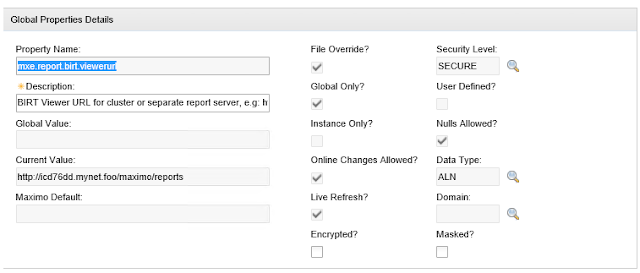

http://myhostname.domain.name/maximo/reports/ , and that is an invalid value. This system property should either be unset or set to

http://myhostname.domain.name/maximo/report (with no trailing "s"). The problem that this causes is that any attempt to click on the "Run reports" action gives an HTTP 404 error.

My "solution"

All* reports?

Tuesday, September 5, 2017

Disabling IE Enhanced Security Configuration on Windows Server 2012

function Disable-IEESC

{

$AdminKey = "HKLM:\SOFTWARE\Microsoft\Active Setup\Installed Components\{A509B1A7-37EF-4b3f-8CFC-4F3A74704073}"

$UserKey = "HKLM:\SOFTWARE\Microsoft\Active Setup\Installed Components\{A509B1A8-37EF-4b3f-8CFC-4F3A74704073}"

Set-ItemProperty -Path $AdminKey -Name "IsInstalled" -Value 0

Set-ItemProperty -Path $UserKey -Name "IsInstalled" -Value 0

Stop-Process -Name Explorer

Write-Host "IE Enhanced Security Configuration (ESC) has been disabled." -ForegroundColor Green

}

Disable-IEESC

Friday, August 18, 2017

A new IBM Redbook on writing applications with Node.js, Express and AngularJS

http://www.redbooks.ibm.com/redbooks/pdfs/sg248406.pdf

It describes the process on Bluemix, but it also applies to a local application.

What I like about it is the intricate detail it goes into for each and every step of the process and line of code in the application. It includes a ton of details about exactly what is going on with each step. If you're just learning these technologies or want a primer, this is an extremely informative resource.

Monday, July 31, 2017

Debugging Remote Control in IBM Control Desk

Introduction

One of the many great features in IBM Control Desk is the ability to have a service desk agent remotely take control of a user's machine for troubleshooting (or repair) purposes. This function leverages the IBM BigFix for Remote Control agent on the target machine and a JNLP file on the server that launches a JAR file on the agent's machine.

Architecture

The architecture is fairly simple. The JAR file running on the agent's machine communicates DIRECTLY with the BigFix Remote Control agent on the user's machine, which listens by default on port 888. This means that any firewalls between the agent's machine and the user's machine must allow a connection to port 888 on the user's machine.

Installing the Agent on the User's Machine

If you manually install the agent, it prompts you for the server name and port, but these values are ignored if you don't have BigFix in your environment, so in that case they can be anything you want. It also asks you for the port that the agent should listen on. This is 888 by default, but can be changed to anything you'd like.

Launching the Controller Interface in debug mode on the Agent's Machine
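Because the controller talks directly to the agent's listening port, a quick reachability test from the service desk agent's machine can rule out firewall problems before any deeper debugging. The following is a minimal sketch, not part of the product: the function name `can_reach` is my own, and it only assumes the default port of 888 described above.

```python
import socket


def can_reach(host: str, port: int = 888, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        # create_connection resolves the host and performs the TCP handshake.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name resolution failures.
        return False
```

Call it with the user's hostname, for example `can_reach("user-pc.example.com")` (a placeholder name), and pass a different `port` if the agent was installed with a non-default listening port. A False result only says the TCP connection failed; it does not say which firewall or host is responsible.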

Thursday, July 20, 2017

DevOps and Microservices Architecture done right - IBM Netcool Agile Service Manager

Introduction

Our last article described just how easy it is to upgrade any or all of the components of Agile Service Manager. This article is meant to describe some of the design, patterns and processes that had to go into the application itself to allow a two-command in-place upgrade.

Microservices Architecture

Containers vs VMs

Containers vs J2EE Applications in an App Server

IBM's Design Choices

What does this have to do with DevOps?

Wednesday, July 19, 2017

IBM Agile Service Manager application maintenance is very easy

So now that I've got a technical audience, here's the amazing thing:

I just received some updated ASM components from IBM. To install them took TWO COMMANDS:

yum install *.rpm

docker-compose up -d

THAT'S IT, and the new components are up and running, with the new functionality. I didn't even have to manually stop or start any processes. It was literally THOSE TWO COMMANDS. This, to me, is absolutely stunning, and hopefully a sign of more good things to come.

Wednesday, July 12, 2017

Using IBM Agile Service Manager and BigFix to obtain and display application communication topology data

Background

We've been working with a client who owns BigFix and Netcool Operations Insight, and who recently purchased the optional Agile Service Manager component of NOI. Up until now, we've been helping this customer obtain communication data (network/port/process connection information) in their environment through BigFix. A valid question you may have is: Doesn't TADDM do that and more? And the answer is yes it does, but the customer has some fairly severe obstacles that prohibit a successful deployment of TADDM.

Why are we doing this?

How are we doing it?

The first challenge was getting the communication information via BigFix. With just a little searching, we realized that this was actually very easy. The 'netstat' command in both Windows and Linux will show you which ports are owned by or in use by which processes, and then it's just a matter of getting more details about each PID. Linux has the 'ps' command, and Windows PowerShell does too, though the output is different, of course. We also found that PowerShell has a few functions that will directly convert command output into XML. This is important because BigFix includes an XML inspector that lets you report on data that's in an XML file. On Linux, a little Perl scripting was used to accomplish the same goal.

So with the IP/port/process information in hand, we then needed to display that data in the ASM Topology Viewer. To do that, we used the included File Observer. Specifically, we wrote a script to create the appropriate nodes and edges so that this information can be displayed by ASM.
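To make the idea concrete, here is a rough sketch of the "connections to nodes and edges" step. It is not the script we used (that was PowerShell and Perl), and it does not produce the File Observer's input format. It assumes Linux `netstat -tnp` style output, and the names `parse_netstat` and `to_topology` are made up for this illustration.

```python
from typing import Dict, List, Tuple


def parse_netstat(output: str) -> List[Dict[str, str]]:
    """Extract established TCP connections from `netstat -tnp` style text."""
    connections = []
    for line in output.splitlines():
        fields = line.split()
        # Expected columns: proto recv-q send-q local remote state pid/program
        if len(fields) < 7 or not fields[0].startswith("tcp"):
            continue
        if fields[5] != "ESTABLISHED":
            continue
        pid, _, program = fields[6].partition("/")
        connections.append({
            "local": fields[3],
            "remote": fields[4],
            "pid": pid,
            "process": program or "unknown",
        })
    return connections


def to_topology(host: str, connections: List[Dict[str, str]]) -> Tuple[List[str], List[Tuple[str, str]]]:
    """Turn connections into node names and (source, target) edges."""
    nodes = set()
    edges = []
    for conn in connections:
        source = f"{host}:{conn['process']}"  # the local process is one node
        target = conn["remote"]               # the remote ip:port is the other
        nodes.update((source, target))
        edges.append((source, target))
    return sorted(nodes), edges
```

Whatever is returned here would still have to be written out in the format the File Observer expects, which is described in the ASM documentation.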

What's it look like?

Conclusion

Thursday, July 6, 2017

A Windows command similar to awk

https://ss64.com/nt/for_cmd.html

My main use for the awk command in *NIX is to pull out some piece of a line of text. I know awk is MUCH more powerful and even has its own robust language, but I've always used it to pull pieces of text out of structured output. And that's what FOR /F does for you. The syntax is completely different, but the capability is there and it's quite powerful.

Friday, June 30, 2017

Now you can get started with Artificial Intelligence on a Raspberry Pi

Thursday, June 29, 2017

More IBM Netcool Agile Service Manager Videos

https://www.youtube.com/playlist?list=PLxv2WlaeOSG9z_L4LCjHzz-qnZ-vDqnjn

Have fun

Tuesday, June 27, 2017

IBM Netcool Agile Service Manager - What is swagger?

Introduction

What is swagger?

Swagger URLs for ASM

Topology Service

Try it out and have fun

Monday, June 26, 2017

Agile Service Manager UI Introduction

IBM Netcool Agile Service Manager Thoughts

What is Agile Service Manager?

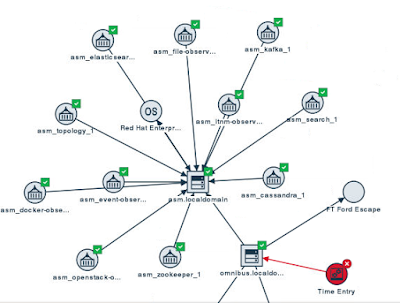

Basically, it's a real-time topology viewer for multiple technologies. Specifically, it can currently render topology data for ITNM, OpenStack and Docker, all in one place. Additionally, it maps events to the topology so you can see any events that are affecting a resource in the context of its topology. So, for example, if you receive a CRITICAL event for a particular Docker container, you will see the node representing that container turn red. Pretty neat. Here's an example of a 1-hop topology of my ASM server's docker infrastructure (you always have to start at some resource to view a topology):

What's so great about it?

Combined Topology View

First, this topology view is wonderful for Operations and Development because it shows a topology view of your combined Network, Docker and OpenStack environments, so everyone can see where applications are running and the dependencies among the pieces.

ElasticSearch

Second, it's got ElasticSearch under the covers, so updates and searches are amazingly fast, and the topology view is built extremely quickly.

Custom Topology Information

Third, you can add your own topology information to make it even more useful!

Here's a screenshot where I've manually modified the topology using a combination of the File Observer and direct access to the Topology Service REST API (from the Swagger URL):

Notice also that Time Entry is in a Critical state. That's due to an event that I generated.

History

Fourth, it maintains history about the topology. That means that you can view the difference in topology between two hours (or two days) ago and right now.

Is ASM a complete replacement for TBSM and/or TADDM?

No, ASM is not a complete replacement for TBSM or TADDM, but you can definitely think of it as "TBSM Lite". TBSM still has some very unique features, such as status propagation, service rules, and custom KPIs that can be defined on a per-business-service basis.

And TADDM's unique capability is the hard work of actually discovering very detailed data and relationships in your environment.

However, because the search and visualization pieces of ASM are so fast and efficient, I can definitely see ASM being used as at least part of the visualization portion of TADDM. What would be required to allow this is a TADDM Observer to be written.

Additionally, I think the ASM database and topology will in the future be leveraged by TBSM, though this will take a little work.

Parting thoughts

Thursday, May 25, 2017

New Linux Samba vulnerability and fix

https://www.samba.org/samba/security/CVE-2017-7494.html

The workaround is easy and is contained in the link above:

In your /etc/samba/smb.conf file, add the following in the [global] section:

nt pipe support = no

Then restart smbd with 'service smb restart'
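If you have several servers to check, a small script can confirm that the workaround is actually in place. This is only a sketch under a few assumptions: the function name `nt_pipe_support_disabled` is my own, it reads the configuration text you pass in, and it does not follow `include` directives or ask the running smbd for its effective settings.

```python
def nt_pipe_support_disabled(conf_text: str) -> bool:
    """Return True if [global] sets 'nt pipe support' to a false value."""
    section = None
    value = None
    for raw in conf_text.splitlines():
        line = raw.strip()
        # Skip blank lines and comments (smb.conf allows both # and ;).
        if not line or line[0] in "#;":
            continue
        if line.startswith("[") and line.endswith("]"):
            section = line[1:-1].strip().lower()
            continue
        if section == "global" and "=" in line:
            key, _, val = line.partition("=")
            # Parameter names ignore case and internal whitespace.
            if "".join(key.lower().split()) == "ntpipesupport":
                value = val.strip().lower()
    return value in {"no", "false", "0"}
```

For example, `nt_pipe_support_disabled(open("/etc/samba/smb.conf").read())` checks the local file.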

Monday, April 24, 2017

BMXAA7025E and BMXAA8313E Errors running MAXINST on ICD 7.6

https://www.ibm.com/developerworks/community/wikis/home?lang=en#!/wiki/Anything%20about%20Tivoli/page/To%20load%20the%20sample%20DB2%20database%20after%20Control%20Desk%207.6%20installed

But I didn't find those steps before I started, so I took my own path.

Specifically, I didn't drop the database, and that meant that I encountered errors BMXAA7025E and BMXAA8313E when running the 'maxinst.sh' script. What I found is that the cleandb operation doesn't really delete all of the tables and views in the MAXIMO schema (I'm on DB2/WebSphere/RHEL 6.5), so when maxinst gets to running the files under:

/opt/IBM/SMP/maximo/tools/maximo/en/dis_cms

It fails because a few of these SQL files try to create tables and views that still exist. I found this link about the problem:

https://www-01.ibm.com/support/docview.wss?uid=swg21647350

But I didn't like it because it tells you to re-create the database. So with a little digging, I found that after I hit the error, I could run the following db2 commands to delete all of the tables and views that were not automatically deleted:

db2 connect to maxdb76 user maximo using passw0rd

db2 DROP TABLE ALIASES

db2 DROP TABLE ATTRIBUTE_TYPES

db2 DROP TABLE BNDLVALS

db2 DROP TABLE BUNDLENM

db2 DROP TABLE CDM_VERSION

db2 DROP TABLE CHANGE_EVENTS

db2 DROP TABLE CLASS_TYPES

db2 DROP TABLE CMSTREE

db2 DROP TABLE CMSTREES

db2 DROP TABLE DESIRED_SUPPORTED_ATTRS

db2 DROP TABLE DESIRED_SUPPORTED_MAP

db2 DROP TABLE ENUMERATIONS

db2 DROP TABLE FTEXPRSN

db2 DROP TABLE FTVALUES

db2 DROP TABLE INTERFACE_TYPES

db2 DROP TABLE LAPARAMS

db2 DROP TABLE LCHENTR

db2 DROP TABLE LCHENTRY

db2 DROP TABLE ME_ATTRIBUTES

db2 DROP TABLE METADATA_ASSN

db2 DROP TABLE MSS

db2 DROP TABLE MSS_ME
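Typing these one at a time gets tedious, so here is a small sketch that generates the same style of command from a list of names. The helper name `drop_table_commands` is hypothetical, and the list you pass in should be whatever tables are actually left over in your own schema.

```python
import re
from typing import Iterable, List

# Plain unquoted identifiers only, so nothing unexpected ends up in a command.
_VALID_NAME = re.compile(r"^[A-Z_][A-Z0-9_]*$")


def drop_table_commands(tables: Iterable[str]) -> List[str]:
    """Build one 'db2 DROP TABLE <name>' command line per table name."""
    commands = []
    for name in tables:
        if not _VALID_NAME.match(name):
            raise ValueError(f"unexpected table name: {name!r}")
        commands.append(f"db2 DROP TABLE {name}")
    return commands


if __name__ == "__main__":
    # Print the commands for review; run them only after connecting as above.
    for command in drop_table_commands(["ALIASES", "ATTRIBUTE_TYPES", "BNDLVALS"]):
        print(command)
```

Printing the commands instead of executing them leaves room to review the list before anything is dropped.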

Monday, April 3, 2017

DevOps: Operations Can't Fail

For a recent example, just look at the recent AWS outage:

http://www.recode.net/2017/3/2/14792636/amazon-aws-internet-outage-cause-human-error-incorrect-command

That was caused by someone debugging an application. None of us want our Operations department to be in that position, but it can obviously happen. I think there are one or more reasons behind why it happened, and I've got some opinions on how we need to work to ensure it doesn't happen to us:

Problem: Developers think Operations is easy

One Solution: We need to learn about "the new stuff"

Problem: Developers think Operations is unnecessary

One Solution: After learning the new stuff, ask to be involved

A great graphic from Ingo Averdunk at IBM

There are other problems and other solutions

Friday, March 31, 2017

DevOps: The functions that must be standardized among different applications

Why?

Business Continuity

Integration With Other Applications

Logging

Monitoring

Event Management

Notification

Runbook Automation

Authentication

Conclusion

Monday, March 20, 2017

Come by booth 568 at #IBMInterConnect to demystify DevOps from an Operations perspective

There is a LOT of chatter about DevOps, but all of it seems to leave Operations almost completely out of the picture. Come to our booth to get our take on DevOps including:

- DevOps tries to encourage Development to do *some* amount of automation and monitoring.

- Your Operations department needs to provide Dev teams with policies for integrating their apps into your monitoring and event management system.

- Your Operations department needs to learn a little about software development so you can help educate your Enterprise on exactly how DevOps can fit into your environment.

- Your Operations department needs to learn enough about Agile (specifically Scrum and Kanban) to participate in relevant conversations when the topics arise.

- and more.

Saturday, March 18, 2017

We're heading to #IBMInterConnect in Vegas

Thursday, February 23, 2017

Visit us at booth 568 at IBM InterConnect March 19-23 in Las Vegas

Stop by booth S568 in the Hybrid Cloud area to talk to us about:

- Our recent and historical successes helping customers like you deploy IBM products.

- IBM's comprehensive suite of ITSM tools, including Netcool, IBM Control Desk, IBM Performance Management, and TADDM.

- How you can effectively use an Agile methodology in your journey to realizing DevOps.

- Different strategies for effective deployments.

- Effectively consolidating and integrating your existing toolsets to your best advantage.

and many more topics!

Thursday, February 9, 2017

How to start a Netcool OMNIbus implementation

https://www.ibm.com/mysupport/s/question/0D50z00006LMPab/how-to-start-implementation-of-tivoli-omnibus?language=en_US

With such an open-ended question, I'm going to provide links that start at the very beginning - Event Management. IBM has a great Redbook on this topic. It's from 2004, but the foundational information is still completely valid:

http://www.redbooks.ibm.com/redbooks/pdfs/sg246094.pdf

It's a REALLY good reference, particularly chapters 1 and 2. Once you understand Event Management concepts, reasons, challenges, needs and personas, I think you then need to move on to information about the OMNIbus components, architecture and capabilities, which you can find in the product documentation here:

Then keep on reading through the rest of the product documentation so you understand how OMNIbus is basically configured.

The next topic you'll want to look at is probes, which process data and send events to OMNIbus. This information is also in the product documentation:

Next you'll probably want to dive into ObjectServer SQL to find out how to manage the events that probes generate:

You should probably also look at the links listed here:

https://www.ibm.com/developerworks/community/wikis/home?lang=en

Somewhere in here, you'll also need to determine if you're going to use Netcool Impact (most new customers purchase both products in some combination). If so, you can start poking around the Impact Wiki:

https://www.ibm.com/developerworks/community/wikis/home?lang=en

Automated testing for IBM Control Desk

https://www.ibm.com/developerworks/community/forums/html/topic?id=4d90a532-31a3-41bd-a128-2186fdae50b8

More information about Selenium itself can be found here:

http://www.seleniumhq.org/

IBM uses Selenium in several tools, including IBM Performance Manager and IBM Application Performance Manager. Essentially, it's used for recording and playing back web browser interactions.

Thursday, January 5, 2017

Maximo: How to view data from an arbitrary table

Mainly, follow the thorough instructions found here:

http://maximobase.blogspot.com/2013/05/how-to-create-custom-dialog-box-in.html

The parts of interest are:

In the dialog element, specify the appropriate mboname:

<dialog id="Testing" mboname="WARRANTYVIEW" label="Contract financial info" >

In this example, the MBO is "WARRANTYVIEW".

Also, you need to specify your MBO's attributes with the "dataattribute" attribute of each appropriate control:

<textbox id="finaninfo_grid_s1_1" dataattribute="totalcost" />

In this case, "totalcost" is the name of the attribute that will be displayed. Yours will be different.

And that's it for my use case. The MBO used by the dialog doesn't have to have any relationship to the main MBO attached to the application.
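Before importing an edited application definition, it can help to check that the dialog fragment is well-formed and that it points at the MBO and attributes you expect. The following is a minimal sketch using Python's standard XML parser. The function name `summarize_dialog` is my own, and the sample simply wraps the two elements shown above in one fragment, so a real dialog will contain more controls.

```python
import xml.etree.ElementTree as ET
from typing import List, Optional, Tuple


def summarize_dialog(xml_text: str) -> Tuple[Optional[str], List[str]]:
    """Return the dialog's mboname and every dataattribute it references."""
    root = ET.fromstring(xml_text)  # raises ParseError if not well-formed
    if root.tag != "dialog":
        raise ValueError(f"expected a <dialog> element, got <{root.tag}>")
    attributes = [
        element.get("dataattribute")
        for element in root.iter()
        if element.get("dataattribute")
    ]
    return root.get("mboname"), attributes


SAMPLE = """
<dialog id="Testing" mboname="WARRANTYVIEW" label="Contract financial info">
  <textbox id="finaninfo_grid_s1_1" dataattribute="totalcost" />
</dialog>
"""

if __name__ == "__main__":
    print(summarize_dialog(SAMPLE))
```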