Tuesday, October 16, 2018

You can now use Vega to create custom graphs in Kibana

https://www.youtube.com/watch?v=lQGCipY3th8

Monday, October 15, 2018

IBM Announces Multicloud Manager

https://www.ibm.com/cloud/multicloud-manager

It allows you to manage containers across all the biggest cloud providers.

You can now see your LinkedIn saved articles on the desktop!

https://www.linkedin.com/feed/saved-articles/

To save an article, you should see a little bookmark icon under all articles. Click that, and you'll get a popup that tells you it's saved and gives you a link to view all of your saved articles.

Tuesday, October 9, 2018

ITMSuper is in a new location

https://www-01.ibm.com/marketing/iwm/iwm/web/pickUrxNew.do?source=tivopal

If you're an ITM 6.x user and you haven't used this tool, you really should download it to help with the management of your environment.

Wednesday, October 3, 2018

Learning neat new things at the Splunk Conference

Splunk is introducing tons of great new features at their conference this year. Many customers complain about the cost of Splunk, but you can lower that cost by leveraging the data to cover more use cases. If you feel like you're not getting the most out of Splunk, give us a call to get some help.

Tuesday, September 18, 2018

IBM just released the TBSM 6.2 Install Guide

https://www.ibm.com/developerworks/community/blogs/7d5ebce8-2dd8-449c-a58e-4676134e3eb8/entry/TBSM_6_2_Installation_Step_by_step_example_with_all_the_prerequisites_installation_and_configuration_steps?lang=en_th

Monday, September 17, 2018

Maximo 7.6.1 includes entitlement for Cognos Analytics 11

https://www.youtube.com/playlist?list=PLOBy7UFdPupclhOayxt8jrebiSZqZiv3R

Thursday, September 6, 2018

Some of our current projects

ServiceNow Architecture and Implementation

We're working with a communications company to implement their procurement, installation and change processes within ServiceNow, with asset feeds from multiple external systems.

ServiceNow Incident Response integration with QRadar

We're helping our client customize both products and the integration between them to best leverage their existing investment and people.

IBM Control Desk for Field Service Management

Netcool Operations Insight Implementation

BigFix Steady State

ICD and BigFix Implementation with Airgap

Friday, August 31, 2018

The latest version of OpenStack (Rocky) can leverage bare metal servers directly

https://www.zdnet.com/article/new-openstack-cloud-release-embraces-bare-metal/

Now you can provision bare metal servers through OpenStack. The linked article describes some of the use cases, and provides additional links to more information.

Wednesday, August 29, 2018

ServiceNow - requiring input from a user completing a task from workflow

Background

A normal part of workflow is requiring some additional information from someone involved in the workflow. A lot of information can be captured automatically, but there often seems to be some information that must be input manually by someone simply because not everything can be determined within an algorithm. This may be because the information is maintained in a separate, walled-off system, or it could be because the sensors required to gather the information aren't yet deployed, etc.

In ServiceNow IT Operations Management, you have this ability out of the box when dealing with Service Catalog Items. A Service Catalog Item is also known as a Requested Item or an RITM. Specifically, you can define Variables that are associated with an RITM, and those Variables are then available for use within any Catalog Tasks that you create within the workflow for that RITM. Without a little customization, this feature is ONLY available within workflows that target the sc_req_item table. So if you require some generic user input as part of a change task, for example, you need to perform the customizations detailed here.

Here is a link to a great article on this very topic. That post is a little old (from 2010), so the information is a bit dated and very terse. I'm writing this article to update the information and to clarify a few pieces.

I suggest you read through these instructions once or twice before you start trying to follow them. Then re-read them as you're implementing them. The pieces will come together for you at some point, just probably not immediately unless you've worked on this area of ServiceNow a bit.

What's already in place?

"Label [actual_table_name]")

Note: Catalog Items already use both the question and question_answer tables to store the Variables (options) that can be specified for each item.

Areas that need to be customized

1. Workflow->Administration->Activity Definitions

Define new variable

Edit the script

Edit the Create Task form

2. System UI->Formatters

3. Change Task default view

4. Service Catalog->Catalog Variables->All Variables

5. Workflow->Workflow Editor

You can click the padlock icon to open it, then click the magnifying glass to search for your questions:

You'll see ALL of the variables/questions in the questions table. Pick the ones you want displayed to the person assigned the task.

The Result

Conclusion

Tuesday, August 14, 2018

The Lenovo P1 is a thin-and-light laptop with a Xeon and 64GB RAM that will be available by September

Additionally, they've got a P72 that will support up to 128GB RAM coming out at the same time.

More memory, storage and power is a great thing.

Wednesday, July 11, 2018

Processing JSON in automation scripts in IBM Control Desk 7.6

Background

You may need to deal with JSON-formatted data in an automation script, and it can be a little tricky. I've written this post to provide a few little pointers to make it easier for you.

JavaScript

Jython in WebSphere

Jython in WebLogic

Tuesday, July 10, 2018

It only takes an hour to get a test BigFix environment installed and working

Monday, July 9, 2018

How to change the BigFix WebUI database userid and password

C:\Program Files (x86)\BigFix Enterprise\BES WebUI\WebUI

However, this is what the contents of that file are:

{"user":"96\u002fzY1rPfE40v69uFttQAg==","password":"MwKBDmT00BEwEZm1ctZahg==","hostname":"WIN-5M6866TPST1.mynet.foo","port":1433}

And while those look like Base64 encoded values, there's also some encryption going on (try putting either of those strings through an online Base64 encoder/decoder and you'll see).
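If you would rather check this locally than use an online tool, the following Python sketch parses the JSON shown above and Base64-decodes the two values. Both decode without error, but the result is 16 bytes of binary data that is not readable text, which is consistent with the values being encrypted before they were encoded. The helper name `describe` is made up for this illustration.

```python
import base64
import json

# The file contents exactly as shown above (raw string keeps the \u002f escape intact).
RAW = r'{"user":"96\u002fzY1rPfE40v69uFttQAg==","password":"MwKBDmT00BEwEZm1ctZahg==","hostname":"WIN-5M6866TPST1.mynet.foo","port":1433}'


def describe(value):
    """Base64-decode a value; return its byte length and whether it is readable UTF-8 text."""
    decoded = base64.b64decode(value)
    try:
        decoded.decode("utf-8")
        readable = True
    except UnicodeDecodeError:
        readable = False
    return len(decoded), readable


config = json.loads(RAW)  # json.loads turns the \u002f escape into "/"
for field in ("user", "password"):
    length, readable = describe(config[field])
    print(field, length, "bytes, readable text:", readable)
```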

So the first thing I tried was to just put the information in the file in cleartext and restart the WebUI service, so the file looked like:

{"user":"sa","password":"passw0rd","hostname":"WIN-5M6866TPST1.mynet.foo","port":1433}

Amazingly, that worked, and here's the logfile entry that shows it:

Wed, 04 Jul 2018 13:14:24 GMT bf:dbcredentials-error Failed to decrypt database credentials, attempting to use inputted credentials as plaintext
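If you'd rather generate the cleartext version than hand-edit it, here is a minimal Python sketch. It assumes only what is shown above: a single-line JSON object with `user`, `password`, `hostname` and `port` keys. The function name is hypothetical, and writing the result to the actual file and restarting the WebUI service are left out.

```python
import json


def build_credentials_json(user, password, hostname, port=1433):
    """Return the credentials as compact one-line JSON, matching the layout shown above."""
    payload = {
        "user": user,
        "password": password,
        "hostname": hostname,
        "port": port,
    }
    # separators=(",", ":") removes the spaces json.dumps adds by default.
    return json.dumps(payload, separators=(",", ":"))


print(build_credentials_json("sa", "passw0rd", "WIN-5M6866TPST1.mynet.foo"))
```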

Friday, July 6, 2018

For business use, don't buy a laptop with higher than 1080p resolution

The higher end business laptops (Lenovo Thinkpad T, P or X series; Dell XPS; etc.) generally offer a 1920x1080 pixel option as a base, then higher resolutions and touchscreens cost more. In my experience, you'll be the happiest with the lower cost 1920x1080 option. Whether you get a touch-enabled screen or not is up to you, but definitely skip the high resolution screen.

Wednesday, June 27, 2018

Just Announced: IBM Cloud App Management

https://developer.ibm.com/apm/2018/06/26/introducing-ibms-new-service-management-cloud-native-offering-ibm-cloud-app-management/

Some of the highlights are that it runs on IBM Cloud Private (so it runs in containers orchestrated by Kubernetes) and supports both ITM v6 and APM v8 agents.

Monday, June 25, 2018

Reading and writing files in a Maximo automation script

Background

All of the product documentation tells you to use the product provided logging for debugging automation scripts (see here, for example: https://www.ibm.com/support/knowledgecenter/SSZRHJ/com.ibm.mbs.doc/autoscript/c_ctr_auto_script_debug.html ). For quick debugging, however, I thought that was cumbersome, so I decided to figure out how to access files directly from within an automation script. This post goes over exactly what's required to do that. Maximo supports Jython and Rhino-JavaScript for automation scripting, and I'll cover both of those here.

Jython
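As a rough illustration of the Jython side, the sketch below uses only plain Python file I/O, which Jython also accepts. It is not taken from the product documentation: the function names and any path you pass in are hypothetical, and it assumes the account running the application server is allowed to write to that location. It uses `try`/`finally` rather than `with` because older Jython levels may not accept `with` without a `__future__` import.

```python
def append_debug_line(path, message):
    """Append one line of debug text to the file at path."""
    handle = open(path, "a")
    try:
        handle.write(message + "\n")
    finally:
        handle.close()


def read_debug_lines(path):
    """Return the file's lines with trailing newlines stripped."""
    handle = open(path, "r")
    try:
        return [line.rstrip("\n") for line in handle]
    finally:
        handle.close()
```

Inside a script you would call something like `append_debug_line("/tmp/autoscript_debug.log", "reached step 1")`, where the path is only an example.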

Rhino-JavaScript

Friday, June 15, 2018

ICD 7.6 Fresh install and config with LDAP authentication configured will fail

Apply Deployment Operations-CTGIN5013E: The reconfiguration action deployDatabaseConfiguration failed. Refer to messages in the console for more information.

And if you look in the CTGConfigurationTrace<datetime>.log file, you'll see this error:

SEVERE: NOTE ^^T^Incorrect syntax near the keyword 'null'.

com.microsoft.sqlserver.jdbc.SQLServerException: Incorrect syntax near the keyword 'null'.

at com.microsoft.sqlserver.jdbc.SQLServerException.makeFromDatabaseError(SQLServerException.java:216)

at com.microsoft.sqlserver.jdbc.SQLServerStatement.getNextResult(SQLServerStatement.java:1515)

at com.microsoft.sqlserver.jdbc.SQLServerPreparedStatement.doExecutePreparedStatement(SQLServerPreparedStatement.java:404)

at com.microsoft.sqlserver.jdbc.SQLServerPreparedStatement$PrepStmtExecCmd.doExecute(SQLServerPreparedStatement.java:350)

at com.microsoft.sqlserver.jdbc.TDSCommand.execute(IOBuffer.java:5696)

at com.microsoft.sqlserver.jdbc.SQLServerConnection.executeCommand(SQLServerConnection.java:1715)

at com.microsoft.sqlserver.jdbc.SQLServerStatement.executeCommand(SQLServerStatement.java:180)

at com.microsoft.sqlserver.jdbc.SQLServerStatement.executeStatement(SQLServerStatement.java:155)

at com.microsoft.sqlserver.jdbc.SQLServerPreparedStatement.executeQuery(SQLServerPreparedStatement.java:285)

at com.ibm.tivoli.ccmdb.install.common.util.CmnEncryptPropertiesUtil.init(CmnEncryptPropertiesUtil.java:187)

at com.ibm.tivoli.ccmdb.install.common.util.CmnEncryptPropertiesUtil.<init>(CmnEncryptPropertiesUtil.java:101)

at com.ibm.tivoli.ccmdb.install.common.util.CmnEncryptPropertiesUtil.getInstance(CmnEncryptPropertiesUtil.java:141)

at com.ibm.tivoli.ccmdb.install.common.config.database.ACfgDatabase.createCronTask(ACfgDatabase.java:1391)

at com.ibm.tivoli.ccmdb.install.common.config.database.CfgEnableVMMSyncTaskAction.performAction(CfgEnableVMMSyncTaskAction.java:140)

at com.ibm.tivoli.ccmdb.install.common.config.database.ACfgDatabase.runConfigurationStep(ACfgDatabase.java:1108)

at com.ibm.tivoli.madt.reconfig.database.DeployDBConfiguration.performAction(DeployDBConfiguration.java:493)

at com.ibm.tivoli.madt.configui.config.ConfigureSQLServer.performConfiguration(ConfigureSQLServer.java:75)

at com.ibm.tivoli.madt.configui.common.config.ConfigurationUtilities.runDatabaseConfiguration(ConfigurationUtilities.java:540)

at com.ibm.tivoli.madt.configui.bsi.panels.deployment.TpaeDeploymentPanel$RunOperations.run(TpaeDeploymentPanel.java:1550)

at org.eclipse.jface.operation.ModalContext$ModalContextThread.run(ModalContext.java:121)

Wednesday, June 13, 2018

JD-Gui is an invaluable tool for troubleshooting Java applications

All of that information came from just dropping the JAR file onto JD-Gui.

The problem I'm encountering is a SQL error complaining about a syntax error near the keyword "null". By looking at the trace file produced and the source code, I've been able to reproduce the exact error message, and I'm 100% confident I know exactly in the code where the error is generated. So instead of just randomly trying different possible solutions, I can focus on the very small number of areas that could be causing this particular problem.

I've been using this tool for years, so I'm not sure what took me so long to write about it.

Monday, June 11, 2018

I definitely recommend installing Linux on Windows

The Ubuntu distribution available in the MS Store even comes with vi with color highlighting for known file types (like html or js), and it's got telnet, ssh, sftp, etc. to make your life easy.

It's been available for a while, and I was hesitant to install it, but now I'm very happy I did.

Friday, June 1, 2018

Amazon Chime is a cheaper and more powerful alternative to WebEx

https://aws.amazon.com/chime/

and I can report that it's just as reliable and easy as WebEx, but with more capabilities and at a fraction of the cost. Specifically, it's only a maximum of $15 per month per host, with 100 attendees allowed, plus you get a dial-in number (an 800 number is available, but there are additional per-minute charges associated with it).

We had an older WebEx account that was $50 per host per month, so I was very happy to run across this service and to get a minimum of a 70% savings. I say minimum of 70% savings because some of our host accounts were used only at most 2 days per month, which, with Chime, will now only cost a maximum of $6 per month.
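The savings figures work out as follows. This is just the arithmetic from the prices quoted above, written as a small Python sketch. The $3 per-day charge is an assumption inferred from the $6 quoted for two days of use, and the function names are made up for illustration.

```python
WEBEX_PER_HOST = 50.0     # old WebEx cost per host per month (from the text)
CHIME_MONTHLY_CAP = 15.0  # Chime maximum per host per month (from the text)
CHIME_PER_DAY = 3.0       # assumption: inferred from $6 for 2 days of use


def chime_monthly_cost(days_used):
    """Chime cost for one host: per-day charge, capped at the monthly maximum."""
    return min(days_used * CHIME_PER_DAY, CHIME_MONTHLY_CAP)


def savings_percent(days_used):
    """Percentage saved versus the old WebEx price for one host."""
    saved = WEBEX_PER_HOST - chime_monthly_cost(days_used)
    return round(saved / WEBEX_PER_HOST * 100, 1)


print(savings_percent(20))  # heavy user: hits the $15 cap, so 70.0
print(savings_percent(2))   # light user: pays for 2 days only, so 88.0
```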

High Availability for DB2 on AWS

https://aws.amazon.com/blogs/database/creating-highly-available-ibm-db2-databases-in-aws/

If you're concerned about running your infrastructure in the cloud, please contact us so we can give you the information you need about the tight security and incredible flexibility that AWS provides.

Monday, February 26, 2018

Netcool and other IBM ITSM products upgrades due to Java6 EOS

Problem

IBM has announced End of Support dates for quite a few products in 2018. In many cases, this stems from the impending end of support for Java 1.6. You can search for IBM products and the EOS date here: https://www-01.ibm.com/software/support/lifecycle/

Solution

Gulfsoft Consulting can help you move to a supported release in a short time period, or we can get you upgraded to a product with more features (like moving to NOI from Omnibus). We have helped hundreds of clients over the years upgrade and migrate in situations exactly like this. The typical time needed is a few weeks, not months. For more information contact:

frank.tate@gulfsoft.com 304 376 6183

mark.hudson@gulfsoft.com 816 517 7179

Details

Some of the products whose support ends in 2018 are:

| Product | Version | EOS Date |
| --- | --- | --- |
| IBM Tivoli Monitoring | 6.2.2 | 4/30/2018 |
| IBM Control Desk | 7.5.x | 9/30/2018 |
| Tivoli Workload Scheduler | 8.6.x | 4/30/2018 |
| Netcool Operations Insight | 1.2, 1.3.x | 12/31/2018 |
| Network Mgmt | 9.2.x | 12/31/2018 |
| OMNIbus | 7.4.x | 12/31/2018 |
| Impact | 6.1.x | 12/31/2018 |
| IBM Tivoli Network Manager | 3.9.x, 4.1.x | 12/31/2018 |
| Netcool Performance Manager | 1.3.x | 12/31/2018 |
| Netcool Performance Flow Analyzer | 4.1.x | 12/31/2018 |
| Network Configuration Manager | 6.3.x, 6.4.0, 6.4.1 | 12/31/2018 |

IBM recommends upgrading to later versions of the products as soon as possible in order to maintain full support. After April 2018, support for Java™ 6 will be limited to usage and known problems with possible updates for critical security fixes through the end of 2018. After April 2018, WebSphere Application Server (WAS) 7 support will be limited to non-Java defects. Support for other components will continue as usual.

More information about Gulfsoft can be found here: https://www.gulfsoft.com/about

Friday, February 23, 2018

We've got a few open time slots for one-on-one meetings at #Think2018

Monday, February 19, 2018

#Pink18 is off to a Great Start

The #Pink18 ITSM conference kicked off last night with a reception, and it looks to be another great conference this year. Pink Elephant always has great thought leaders presenting at the sessions, and this year will continue that tradition.

If you're at the conference, please stop by our booth, #601, in the exhibitors showcase.

Friday, January 19, 2018

IBM Maximo named a Leader in Gartner Magic Quadrant for Enterprise Asset Management!

Something very important to note is that the IBM Control Desk product is built entirely on Maximo. So almost all of the features and capabilities that put Maximo into Gartner's Magic Quadrant for Enterprise Asset Management are also included in IBM Control Desk.

Tuesday, January 16, 2018

Gulf Breeze Software Partners is now Gulfsoft Consulting

aspirations to write software at some point. Along the way, we realized that we really prefer implementing and customizing software over writing new applications from scratch. And while we've advertised that we're specialists in the implementation and customization of the IBM suite of products up to this point, we're now marketing the fact that we have experience in and offer services on a much larger array of products from multiple vendors. To effectively market our capabilities to new customers, we decided to change our name from Gulf Breeze Software Partners to Gulfsoft Consulting. We've still got the same amazing people and the same drive to make customers successful, and now we've got a name that more accurately describes what we do. Here’s a link to some of the technologies we work with every day.

Monday, January 8, 2018

We will be at multiple conferences this year: Pink Elephant's Pink18, IBM's Think 2018, and ServiceNow's Knowledge18

Pink18 Feb 18-21, 2018 at the JW Marriott Orlando, Grande Lakes

Think 2018 Mar 19-22, 2018 Las Vegas, NV

Knowledge18 May 7-10, 2018 Las Vegas, NV

Friday, November 17, 2017

An updated version of MxLoader is available

https://www.ibm.com/developerworks/community/groups/service/html/communityview?communityUuid=220c5757-ac28-4f25-bd08-457c5a3364c3#fullpageWidgetId=W9cbbfec147e6_4341_9578_7671e722619f&file=31d8347f-219f-45b2-a1d2-18f777e38810

Friday, November 3, 2017

Canada's Secret Spy Agency Has Open-Sourced a Malware-Fighting Tool

https://bitbucket.org/cse-assemblyline/

All of the details about the release can be found here:

http://www.cbc.ca/news/technology/cse-canada-cyber-spy-malware-assemblyline-open-source-1.4361728

Every IT department in the world should download and use this if they don't already have something in place.

Thursday, November 2, 2017

We're a sponsor at Pink18 in Orlando!

Tuesday, October 24, 2017

How Netcool Operations Insight delivers cognitive automation by Kristian Stewart

https://www.ibm.com/blogs/cloud-computing/2017/08/netcool-operations-insight-cognitive-automation/

One important topic that Kristian omitted from his excellent article is the optional Agile Service Manager (ASM) component of NOI. ASM provides a context-aware topology view of your applications and infrastructure, which gives you a clear view of the impacts caused by events. Take a look at our other articles and YouTube videos for more information on ASM.

Friday, October 13, 2017

What to use instead of ITMSuper

https://www.ibm.com/developerworks/community/blogs/0587adbc-8477-431f-8c68-9226adea11ed/entry/Helping_us_help_you_ITM_Bitesize_Edition_ITMSuper?lang=en

Wednesday, October 11, 2017

Free mobile app for monitoring the status of your Maximo and ICD environments

https://www.ibm.com/us-en/marketplace/mxadmin

It was written by A3J Group and appears to have some pretty nice functionality.

Wednesday, September 20, 2017

IBM Control Desk 7.6.0.3 is available

Introduction

IBM has released the ICD 7.6.0.3 FixPack: https://www-945.ibm.com/

Installation issues

New/Updated Functionality

Service Portal

Update 9/25/17: Control Desk Platform

Monday, September 11, 2017

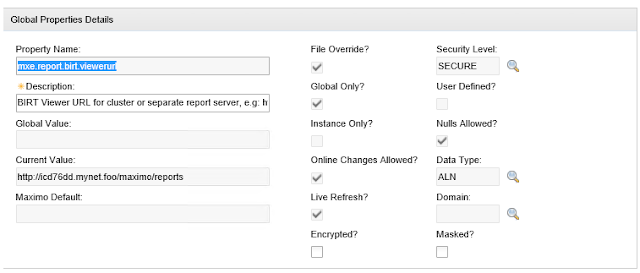

Force change of global system property in Maximo

UPDATE 6/3/2020

Introduction

Problem

http://myhostname.domain.name/maximo/reports/ , and that is an invalid value. This system property should either be unset or set to

http://myhostname.domain.name/maximo/report (with no trailing "s"). The problem that this causes is that any attempt to click on the "Run reports" action gives an HTTP 404 error.

My "solution"

All* reports?

Tuesday, September 5, 2017

Disabling IE Enhanced Security Mode on Windows 2012 Server

function Disable-IEESC
{
    $AdminKey = "HKLM:\SOFTWARE\Microsoft\Active Setup\Installed Components\{A509B1A7-37EF-4b3f-8CFC-4F3A74704073}"
    $UserKey = "HKLM:\SOFTWARE\Microsoft\Active Setup\Installed Components\{A509B1A8-37EF-4b3f-8CFC-4F3A74704073}"
    Set-ItemProperty -Path $AdminKey -Name "IsInstalled" -Value 0
    Set-ItemProperty -Path $UserKey -Name "IsInstalled" -Value 0
    Stop-Process -Name Explorer
    Write-Host "IE Enhanced Security Configuration (ESC) has been disabled." -ForegroundColor Green
}

Disable-IEESC

Friday, August 18, 2017

A new IBM Redbook on writing applications with Node.JS, Express and AngularJS

http://www.redbooks.ibm.com/redbooks/pdfs/sg248406.pdf

It describes the process on BlueMix, but it is applicable to a local application also.

What I like about it is the intricate detail it goes into for each and every step of the process and line of code in the application. It includes a ton of details about exactly what is going on with each step. If you're just learning these technologies or want a primer, this is an extremely informative resource.

Monday, July 31, 2017

Debugging Remote Control in IBM Control Desk

Introduction

One of the many great features in IBM Control Desk is the ability to have a service desk agent remotely take control of a user's machine for troubleshooting (or repair) purposes. This function leverages the IBM BigFix for Remote Control agent on the target machine and a JNLP file on the server that launches a JAR file on the agent's machine.

Architecture

The architecture is fairly simple. The JAR file running on the agent's machine communicates DIRECTLY with the BigFix Remote Control agent on the user's machine, which listens by default on port 888. This means that any firewalls between the agent's machine and the user's machine must allow a connection to port 888 on the user's machine.

Installing the Agent on the User's Machine

If you manually install the agent, it prompts you for the server name and port, but these values are ignored if you don't have BigFix in your environment. So if you don't have BigFix in your environment, these two values can be anything you want - it doesn't matter. It also asks you for the port that the agent should listen on. This is 888 by default, but can be changed to anything you'd like.

Launching the Controller Interface in debug mode on the Agent's Machine
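Before launching the controller, it can help to confirm that the port the Remote Control agent listens on is actually reachable from the service desk agent's machine. The Python sketch below is one way to do that with a plain TCP connection attempt. It is not part of the product: the function name is hypothetical, and the default of 888 simply mirrors the default port mentioned above.

```python
import socket


def port_is_reachable(host, port=888, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within timeout seconds."""
    try:
        connection = socket.create_connection((host, port), timeout=timeout)
    except OSError:
        # Covers refused connections, timeouts, and name-resolution failures.
        return False
    connection.close()
    return True
```

For example, `port_is_reachable("users-pc.example.com")` (a made-up hostname) returning False would suggest a firewall, a stopped agent, or a non-default listening port.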

Thursday, July 20, 2017

DevOps and Microservices Architecture done right - IBM Netcool Agile Service Manager

Introduction

Our last article described just how easy it is to upgrade any or all of the components of Agile Service Manager. This article is meant to describe some of the design, patterns and processes that had to go into the application itself to allow a two-command in-place upgrade.

Microservices Architecture

Containers vs VMs

Containers vs J2EE Applications in an App Server

IBM's Design Choices

What does this have to do with DevOps?

Wednesday, July 19, 2017

IBM Agile Service Manager application maintenance is very easy

So now that I've got a technical audience, here's the amazing thing:

I just received some updated ASM components from IBM. To install them took TWO COMMANDS:

yum install *.rpm

docker compose up -d

THAT'S IT, and the new components are up and running, with the new functionality. I didn't even have to manually stop or start any processes. It was literally THOSE TWO COMMANDS. This, to me, is absolutely stunning, and hopefully a sign of more good things to come.

Wednesday, July 12, 2017

Using IBM Agile Service Manager and BigFix to obtain and display application communication topology data

Background

We've been working with a client who owns BigFix and Netcool Operations Insight, and who recently purchased the optional Agile Service Manager component of NOI. Up until now, we've been helping this customer obtain communication data (network/port/process connection information) in their environment through BigFix. A valid question you may have is: Doesn't TADDM do that and more? And the answer is yes it does, but the customer has some fairly severe obstacles that prohibit a successful deployment of TADDM.

Why are we doing this?

How are we doing it?

The first challenge was getting the communication information via BigFix. With just a little searching, we realized that this was actually very easy. The 'netstat' command in both Windows and Linux will actually show you information about which ports are owned by/in use by which processes, and then it's just a matter of getting more details about each PID. Linux has the 'ps' command, and Windows PowerShell does too, though the output is different, of course. We also found that PowerShell has a few functions that will directly convert command output into XML. This is important because BigFix includes an XML inspector that lets you report on data that's in an XML file. On Linux, a little Perl scripting was used to accomplish the same goal.

So with the IP/port/process information in hand, we then needed to display that data in the ASM Topology Viewer. To do that, we used the included File Observer. Specifically, we wrote a script to create the appropriate nodes and edges so that this information can be displayed by ASM.
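To make the nodes-and-edges idea concrete, here is a small Python sketch. It is not the script we wrote and it does not produce the File Observer's input format. It only shows the general shape of the transformation, assuming Windows `netstat -ano` style output with five columns per TCP line. The sample data, function names and edge labels are all invented for illustration.

```python
SAMPLE = """\
  Proto  Local Address          Foreign Address        State           PID
  TCP    10.0.0.5:49712         10.0.0.9:1433          ESTABLISHED     4321
  TCP    10.0.0.5:49713         10.0.0.7:443           ESTABLISHED     5500
  TCP    0.0.0.0:135            0.0.0.0:0              LISTENING       900
"""


def parse_established(netstat_text):
    """Return one dict per ESTABLISHED TCP line of netstat -ano style output."""
    connections = []
    for line in netstat_text.splitlines():
        fields = line.split()
        # Skip the header, listeners, and anything that is not a 5-column TCP line.
        if len(fields) != 5 or fields[0] != "TCP" or fields[3] != "ESTABLISHED":
            continue
        remote_ip, remote_port = fields[2].rsplit(":", 1)
        connections.append({
            "local_ip": fields[1].rsplit(":", 1)[0],
            "remote_ip": remote_ip,
            "remote_port": int(remote_port),
            "pid": int(fields[4]),
        })
    return connections


def build_topology(connections):
    """Turn connections into a sorted node list and (source, target, label) edges."""
    nodes = set()
    edges = []
    for conn in connections:
        nodes.update([conn["local_ip"], conn["remote_ip"]])
        label = "pid %d -> port %d" % (conn["pid"], conn["remote_port"])
        edges.append((conn["local_ip"], conn["remote_ip"], label))
    return sorted(nodes), edges


nodes, edges = build_topology(parse_established(SAMPLE))
print(nodes)
print(edges)
```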

What's it look like?

Conclusion

Thursday, July 6, 2017

A Windows command similar to awk

https://ss64.com/nt/for_cmd.html

My main use for the awk command in *NIX is to pull out some piece of a line of text. I know awk is MUCH more powerful and even has its own robust language, but I've always used it to pull pieces of text out of structured output. And that's what FOR /F does for you. The syntax is completely different, but the capability is there and it's quite powerful.
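For comparison, the field-picking behavior that awk's `$2` and the `tokens=` and `delims=` options of FOR /F have in common can be sketched in a few lines of Python. The helper below is hypothetical and covers only the simple case: single-character delimiters, runs of delimiters treated as one separator, and 1-based token numbers.

```python
import re


def pick_tokens(line, tokens, delims=" \t"):
    """Return the requested 1-based fields of line, split on any of the delimiter characters."""
    # Runs of delimiters count as a single separator; empty pieces are dropped.
    pattern = "[" + re.escape(delims) + "]+"
    parts = [piece for piece in re.split(pattern, line) if piece]
    # Token numbers beyond the end of the line are silently ignored.
    return [parts[n - 1] for n in tokens if n <= len(parts)]


print(pick_tokens("TCP  10.0.0.5:49712  10.0.0.9:1433  ESTABLISHED  4321", [2, 5]))
print(pick_tokens("alpha,beta,gamma", [2], delims=","))
```

The second call roughly corresponds to `FOR /F "tokens=2 delims=," %a IN ("alpha,beta,gamma") DO @ECHO %a` at a command prompt.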

Friday, June 30, 2017

Now you can get started with Artificial Intelligence on a Raspberry Pi

Thursday, June 29, 2017

More IBM Netcool Agile Service Manager Videos

https://www.youtube.com/playlist?list=PLxv2WlaeOSG9z_L4LCjHzz-qnZ-vDqnjn

Have fun