Tuesday, June 27, 2017

IBM Netcool Agile Service Manager - What is swagger?

Monday, June 26, 2017

Agile Service Manager UI Introduction

IBM Netcool Agile Service Manager Thoughts

What is Agile Service Manager?

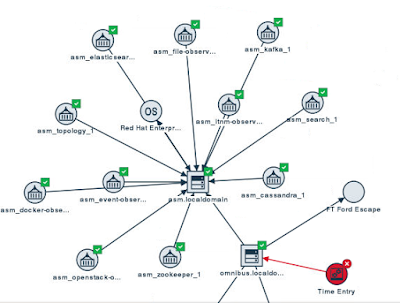

Basically, it's a real-time topology viewer for multiple technologies. Specifically, it can currently render topology data for ITNM, OpenStack and Docker, all in one place. Additionally, it maps events to the topology so you can see any events that are affecting a resource in the context of its topology. So, for example, if you receive a CRITICAL event for a particular Docker container, you will see the node representing that container turn red. Pretty neat. Here's an example of a 1-hop topology of my ASM server's docker infrastructure (you always have to start at some resource to view a topology):

What's so great about it?

Combined Topology View

First, this topology view is wonderful for Operations and Development because it combines your Network, Docker and OpenStack environments in one view, so everyone can see where applications are running and the dependencies among the pieces.

ElasticSearch

Second, it's got ElasticSearch under the covers, so updates and searches are amazingly fast, and the topology view is built extremely quickly.

Custom Topology Information

Third, you can add your own topology information to make it even more useful!

Here's a screenshot where I've manually modified the topology using a combination of the File Observer and direct access to the Topology Service REST API (from the Swagger URL):

Notice also that Time Entry is in a Critical state. That's due to an event that I generated.

History

Fourth, it maintains history about the topology. That means that you can view the difference in topology between 2 hours (or two days) ago and right now.

Is ASM a complete replacement for TBSM and/or TADDM?

No, ASM is not a complete replacement for TBSM or TADDM, but you can definitely think of it as "TBSM Lite". TBSM still has some unique features, such as status propagation, service rules, and custom KPIs that can be defined on a per-business-service basis.

And TADDM's unique capability is the hard work of actually discovering very detailed data and relationships in your environment.

However, because the search and visualization pieces of ASM are so fast and efficient, I can definitely see ASM being used as at least part of the visualization portion of TADDM. What would be required to allow this is a TADDM Observer to be written.

Additionally, I think the ASM database and topology will in the future be leveraged by TBSM, though this will take a little work.

Parting thoughts

Thursday, May 25, 2017

New Linux Samba vulnerability and fix

https://www.samba.org/samba/security/CVE-2017-7494.html

The workaround is easy and is contained in the link above:

In your /etc/samba/smb.conf file, add the following to the [global] section:

nt pipe support = no

Then restart smbd with 'service smb restart'
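If you have several servers to patch, the edit above can be scripted. Here is a minimal Python sketch that works on the text of an smb.conf file and puts the setting right after the [global] header. The function name is my own, and it assumes a [global] section already exists; it is an illustration, not a tested hardening tool.

```python
def disable_nt_pipe_support(smb_conf_text):
    """Return smb.conf text with 'nt pipe support = no' in the [global] section.

    Hypothetical helper: any active 'nt pipe support' line already in [global]
    is dropped so the setting appears exactly once. Commented lines are kept.
    """
    out = []
    in_global = False
    inserted = False
    for line in smb_conf_text.splitlines():
        stripped = line.strip()
        if stripped.startswith("[") and stripped.endswith("]"):
            # A section header: track whether we are inside [global]
            in_global = stripped.lower() == "[global]"
            out.append(line)
            if in_global and not inserted:
                out.append("    nt pipe support = no")
                inserted = True
            continue
        if in_global and stripped.lower().replace(" ", "").startswith("ntpipesupport="):
            # Skip the old value; the new one was added under the header
            continue
        out.append(line)
    if not inserted:
        raise ValueError("no [global] section found")
    return "\n".join(out) + "\n"
```

You would read /etc/samba/smb.conf, pass its contents through this function, write the result back (after taking a backup), and then restart smbd as described above.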

Monday, April 24, 2017

BMXAA7025E and BMXAA8313E Errors running MAXINST on ICD 7.6

https://www.ibm.com/developerworks/community/wikis/home?lang=en#!/wiki/Anything%20about%20Tivoli/page/To%20load%20the%20sample%20DB2%20database%20after%20Control%20Desk%207.6%20installed

But I didn't find those steps before I started, so I took my own path.

Specifically, I didn't drop the database, and that meant that I encountered errors BMXAA7025E and BMXAA8313E when running the 'maxinst.sh' script. What I found is that the cleandb operation doesn't really delete all of the tables and views in the MAXIMO schema (I'm on DB2/WebSphere/RHEL 6.5), so when maxinst gets to running the files under:

/opt/IBM/SMP/maximo/tools/maximo/en/dis_cms

It fails because a few of these SQL files try to create tables and views that still exist. I found this link about the problem:

https://www-01.ibm.com/support/docview.wss?uid=swg21647350

But I didn't like it because it tells you to re-create the database. So with a little digging, I found that after I hit the error, I could run the following db2 commands to delete all of the tables and views that were not automatically deleted:

db2 connect to maxdb76 user maximo using passw0rd

db2 DROP TABLE ALIASES

db2 DROP TABLE ATTRIBUTE_TYPES

db2 DROP TABLE BNDLVALS

db2 DROP TABLE BUNDLENM

db2 DROP TABLE CDM_VERSION

db2 DROP TABLE CHANGE_EVENTS

db2 DROP TABLE CLASS_TYPES

db2 DROP TABLE CMSTREE

db2 DROP TABLE CMSTREES

db2 DROP TABLE DESIRED_SUPPORTED_ATTRS

db2 DROP TABLE DESIRED_SUPPORTED_MAP

db2 DROP TABLE ENUMERATIONS

db2 DROP TABLE FTEXPRSN

db2 DROP TABLE FTVALUES

db2 DROP TABLE INTERFACE_TYPES

db2 DROP TABLE LAPARAMS

db2 DROP TABLE LCHENTR

db2 DROP TABLE LCHENTRY

db2 DROP TABLE ME_ATTRIBUTES

db2 DROP TABLE METADATA_ASSN

db2 DROP TABLE MSS

db2 DROP TABLE MSS_ME
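Rather than typing each command, you could generate them. This is a small Python sketch that builds the same command lines from a list of table names. The list is just the tables shown above, the connection values are the ones from my test system, and the function name is made up for the example.

```python
# Tables listed in the post; adjust to whatever is left over in your schema.
LEFTOVER_TABLES = [
    "ALIASES", "ATTRIBUTE_TYPES", "BNDLVALS", "BUNDLENM", "CDM_VERSION",
    "CHANGE_EVENTS", "CLASS_TYPES", "CMSTREE", "CMSTREES",
    "DESIRED_SUPPORTED_ATTRS", "DESIRED_SUPPORTED_MAP", "ENUMERATIONS",
    "FTEXPRSN", "FTVALUES", "INTERFACE_TYPES", "LAPARAMS", "LCHENTR",
    "LCHENTRY", "ME_ATTRIBUTES", "METADATA_ASSN", "MSS", "MSS_ME",
]


def build_drop_commands(tables, database="maxdb76", user="maximo", password="passw0rd"):
    """Return the db2 command lines: one connect, then one DROP TABLE per table."""
    commands = ["db2 connect to {} user {} using {}".format(database, user, password)]
    commands.extend("db2 DROP TABLE {}".format(table) for table in tables)
    return commands


if __name__ == "__main__":
    # Print the commands so they can be reviewed before being run in a shell
    print("\n".join(build_drop_commands(LEFTOVER_TABLES)))
```

Printing the commands instead of executing them is deliberate: you get a chance to review the list before anything is dropped.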

Monday, April 3, 2017

DevOps: Operations Can't Fail

For a recent example, just look at the recent AWS outage:

http://www.recode.net/2017/3/2/14792636/amazon-aws-internet-outage-cause-human-error-incorrect-command

That was caused by someone debugging an application. None of us want our Operations department to be in that position, but it can obviously happen. I think there are one or more reasons behind why it happened, and I've got some opinions on how we need to work to ensure it doesn't happen to us:

Problem: Developers think Operations is easy

One Solution: We need to learn about "the new stuff"

Problem: Developers think Operations is unnecessary

One Solution: After learning the new stuff, ask to be involved

A great graphic from Ingo Averdunk at IBM

There are other problems and other solutions

Friday, March 31, 2017

DevOps: The functions that must be standardized among different applications

Why?

Business Continuity

Integration With Other Applications

Logging

Monitoring

Event Management

Notification

Runbook Automation

Authentication

Conclusion

Monday, March 20, 2017

Come by booth 568 at #IBMInterConnect to demystify DevOps from an Operations perspective

There is a LOT of chatter about DevOps, but all of it seems to leave Operations almost completely out of the picture. Come to our booth to get our take on DevOps including:

- DevOps tries to encourage Development to do *some* amount of automation and monitoring.

- Your Operations department needs to provide Dev teams with policies for integrating their apps into your monitoring and event management system.

- Your Operations department needs to learn a little about software development so you can help educate your Enterprise on exactly how DevOps can fit into your environment.

- Your Operations department needs to learn enough about Agile (specifically Scrum and Kanban) to participate in relevant conversations when the topics arise.

- and more.

Saturday, March 18, 2017

We're heading to #IBMInterConnect in Vegas

Thursday, February 23, 2017

Visit us at booth 568 at IBM InterConnect March 19-23 in Las Vegas

Stop by booth S568 in the Hybrid Cloud area to talk to us about:

- Our recent and historical successes helping customers like you deploy IBM products.

- IBM's comprehensive suite of ITSM tools, including Netcool, IBM Control Desk, IBM Performance Management, and TADDM.

- How you can effectively use an Agile methodology in your journey to realizing DevOps.

- Different strategies for effective deployments.

- Effectively consolidating and integrating your existing toolsets to your best advantage.

and many more topics!

Thursday, February 9, 2017

How to start a Netcool OMNIbus implementation

https://www.ibm.com/mysupport/s/question/0D50z00006LMPab/how-to-start-implementation-of-tivoli-omnibus?language=en_US

With such an open-ended question, I'm going to provide links that start at the very beginning - Event Management. IBM has a great Redbook on this topic. It's from 2004, but the foundational information is still completely valid:

http://www.redbooks.ibm.com/redbooks/pdfs/sg246094.pdf

It's a REALLY good reference, particularly chapters 1 and 2. Once you understand Event Management concepts, reasons, challenges, needs and personas, I think you then need to move on to information about the OMNIbus components, architecture and capabilities, which you can find in the product documentation here:

Then keep on reading through the rest of the product documentation so you understand how OMNIbus is basically configured.

The next topic you'll want to look at is probes, which process data and send events to OMNIbus. This information is also in the product documentation:

Next you'll probably want to dive into ObjectServer SQL to find out how to manage the events that probes generate:

You should probably also look at the links listed here:

https://www.ibm.com/developerworks/community/wikis/home?lang=en

Somewhere in here, you'll also need to determine if you're going to use Netcool Impact (most new customers purchase both products in some combination). If so, start poking around the Impact Wiki:

https://www.ibm.com/developerworks/community/wikis/home?lang=en

Automated testing for IBM Control Desk

https://www.ibm.com/developerworks/community/forums/html/topic?id=4d90a532-31a3-41bd-a128-2186fdae50b8

More information about Selenium itself can be found here:

http://www.seleniumhq.org/

IBM uses Selenium in several tools, including IBM Performance Manager and IBM Application Performance Manager. Essentially, it's used for recording and playing back web browser interactions.

Thursday, January 5, 2017

Maximo: How to view data from an arbitrary table

Mainly, follow the thorough instructions found here:

http://maximobase.blogspot.com/2013/05/how-to-create-custom-dialog-box-in.html

The parts of interest are:

In the dialog element, specify the appropriate mboname:

<dialog id="Testing" mboname="WARRANTYVIEW" label="Contract financial info" >

In this example, the MBO is "WARRANTYVIEW".

Also, you need to specify your MBO's attributes with the "dataattribute" attribute of each appropriate control:

<textbox id="finaninfo_grid_s1_1" dataattribute="totalcost" />

In this case, "totalcost" is the name of the attribute that will be displayed. Yours will be different.

And that's it for my use case. The MBO used by the dialog doesn't have to have any relationship to the main MBO attached to the application.

Friday, December 16, 2016

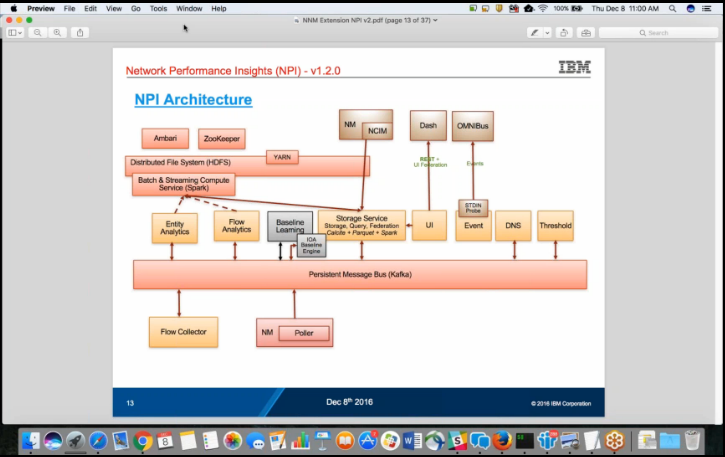

An awesome video on IBM Network Performance Insights 1.2

https://www.imwuc.org/p/do/sd/sid=3340&source=6

Here are a couple of screenshots to show you the kind of detail covered:

The speakers in the video are:

Krishna Kodali, Sr. Software Engineer, IBM - Krishna Kodali is a Senior Software Engineer at IBM, where he provides support and consultation for the Netcool Product Suite. He has been working with the Netcool Product Suite since 2005 and is a worldwide Subject Matter Expert (SME) for IBM Tivoli Network Manager (ITNM). He offers guidance in design and implementation for any size of deployment. Krishna has a Bachelor’s degree in Engineering and is a Cisco Certified Network Professional (CCNP). He specializes in Network Technologies, System Management, IT Service Management, Virtualization, SNMP and Netcool.

John Parish, Technical Enablement Specialist, IBM - John Parish has been teaching IBM courses for the past 10 years.

Wednesday, November 16, 2016

PINK17 Feb 19-22, Las Vegas

Friday, November 4, 2016

IBM's Cloud Business Partner Advisory Council in New Orleans was amazing

Wednesday, October 5, 2016

Cloning Maximo 7.6

https://www.ibm.com/developerworks/community/blogs/a9ba1efe-b731-4317-9724-a181d6155e3a/entry/Cloning_Maximo_7_6?lang=en

In the comments you'll also find a link to this MSSQL script that Brian Baird uses to update several values after cloning:

https://www.ibm.com/developerworks/community/blogs/a9ba1efe-b731-4317-9724-a181d6155e3a/entry/Cloning_Maximo_7_6?lang=en

Tuesday, September 20, 2016

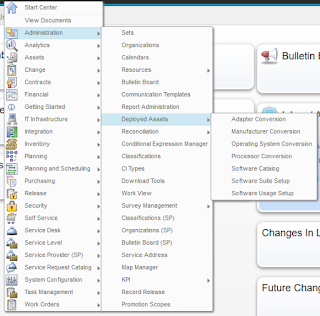

All of the applications included in IBM Control Desk for Service Providers 7.6 and 7.6.0.1

Friday, September 9, 2016

Adding an additional hostname to Maximo on WebSphere and IBM HTTP Server

To fix this problem, you need to add a Host Alias to each of the appropriate Virtual Hosts that you have defined for Maximo, then restart the application server(s). Here's how:

1. In my environment, I want to be able to access Maximo using the URL:

http://icdcommon/maximo

The two servers participating in my cluster are named icd1 and icd2. I'm adding an entry in my /etc/hosts (or \windows\system32\drivers\etc\hosts) file for icdcommon to be an alias for icd1.

(In a real environment, you would be modifying DNS appropriately).

2. Log into the WebSphere admin console at http://dmgr_host:9060/admin

3. Navigate to Environment->Virtual Hosts, where you will see multiple virtual hosts. I have the application configured in a cluster named MXCLUSTER, so the virtual hosts of interest to me are:

MXCLUSTER_host

default_host

webserver1_host

4. For each of the above Virtual Hosts, click on that host then click the Host aliases link.

5. Click the New button to add a new entry and in that entry, specify:

Host Name: icdcommon

Port: 80

6. Click OK, then click the save link at the top of the page (or you can wait until you're done to click save).

7. Once you've done the above for each of the three Virtual Hosts, you need to restart all IBM HTTP Servers AND all application servers.

8. Now you should be able to access the application with http://icdcommon/maximo (you may need to restart your browser).

Thursday, September 8, 2016

Installing IBM Control Desk 7.6 on RHEL 6.5 in a test environment

CTGIN8264E : Hostname failure : System hostname is not fully qualified

And the reason it's a big hitch is because the error is misleading. You do need to have your hostname set to your FQDN, but you also need to have an actual DNS (not just /etc/hosts, but true DNS) A record for your hostname. If you don't already have one that you can update, you can install the package named:

The Berkeley Internet Name Domain (BIND) DNS (Domain Name System) server

It is available on the base Redhat install DVD.
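Before launching the installer, a quick pre-check can save some time. The following Python sketch tests whether a hostname looks fully qualified and whether it resolves at all. The function names are my own. Note the limitation: socket.getaddrinfo also consults /etc/hosts, so a successful lookup here does not prove that a true DNS A record exists; for that you would still query DNS directly with a tool such as nslookup or dig.

```python
import socket


def looks_fully_qualified(hostname):
    """True if the name has at least two non-empty dot-separated labels."""
    labels = hostname.rstrip(".").split(".")
    return len(labels) >= 2 and all(labels)


def check_host(hostname=None):
    """Report on the given hostname, or on this system's hostname if omitted."""
    name = hostname or socket.gethostname()
    report = {
        "hostname": name,
        "fully_qualified": looks_fully_qualified(name),
        "resolves": False,
    }
    try:
        # Uses the system resolver, which may answer from /etc/hosts
        socket.getaddrinfo(name, None)
        report["resolves"] = True
    except socket.gaierror:
        pass
    return report


if __name__ == "__main__":
    print(check_host())
```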

Friday, July 1, 2016

Accessing the CTGINST1 DB2 Instance From the Command Line Processor

Monday, June 27, 2016

Installing the ICD Demo Content along with the ICD Process Content Packs

Do NOT try to install the 7.5.1 demo data into 7.6. It really doesn't work well. I'm leaving this post intact because the steps are useful in general I believe.

If you try to install the IBM Control Desk Content Packs along with the 7.5.1 Demo Content, you're going to have problems. I already addressed a standalone problem with the Demo Content in an earlier post, and now I've gotten further, so wanted to share the wisdom I gained.

No matter which order you install - Demo Content then Process Packs (specifically the Change Management Content Pack) or the other way around - you're going to encounter the following error:

One or more values in the INSERT statement, UPDATE statement, or foreign key update caused by a DELETE statement are not valid because the primary key, unique constraint or unique index identified by "1" constrains table "MAXIMO.PLUSPSERVAGREE" from having duplicate values for the index key.. SQLCODE=-803, SQLSTATE=23505, DRIVER=4.11.69

The cause for this is that the Change Management Content Pack and also the Service Desk Content Pack specify hard-coded values for PLUSPSERVAGREEID in the DATA\PLUSRESPPLAN.xml file, when the inserts should be creating and using the next value of the PLUSPSERVAGREESEQ sequence.

Given this root cause, there are two possible solutions to the problem, depending on the order in which you install things.

If you install the Content Packs before the Demo Content

So in my first run, I installed the Content Packs first, and then the Demo Content (after modifying it as explained in an earlier post). And the exact SQL statement causing this problem was:

SQL = [insert into pluspservagree ( active,calendar,changeby,changedate,createby,createdate,description,hasld,intpriorityeval,intpriorityvalue,langcode,objectname,orgid,ranking,sanum,pluspservagreeid,servicetype,shift,slanum,calendarorgid,slatype,status,statusdate,slaid,slahold,stoprpifjportt,billapprovedwork) values (?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,nextval for PLUSPSERVAGREESEQ,?,?,?,?,?,?,?,?,?,?,0)]

parameter[1]=1

parameter[2]=BUS01

parameter[3]=MAXADMIN

parameter[4]=2007-10-12 12:33:55.0

parameter[5]=MAXADMIN

parameter[6]=2007-10-12 12:33:55.0

parameter[7]=P1 Incident - Respond in 30 mins. Resolve in 2 hrs.

parameter[8]=0

parameter[9]=EQUALS

parameter[10]=1

parameter[11]=EN

parameter[12]=INCIDENT

parameter[13]=PMSCIBM

parameter[14]=100

parameter[15]=SRM1002

parameter[16]=SLA

parameter[17]=BUSDAY

parameter[18]=SRM1002

parameter[19]=PMSCIBM

parameter[20]=CUSTOMER

parameter[21]=ACTIVE

parameter[22]=2011-09-14 13:26:13.247

parameter[23]=1

parameter[24]=0

parameter[25]=0

To find the constraint causing the problem, I found this page:

https://bytes.com/topic/db2/answers/810243-error-messages-key-constraint-violations

Which showed that I could find the particular constraint with the following SQL:

SELECT INDNAME, COLNAMES

FROM SYSCAT.INDEXES

WHERE IID = 1

AND TABNAME = 'PLUSPSERVAGREE'

That basically showed an index named SQL160607091434350 consisting of just the column named PLUSPSERVAGREEID.

So each row in the PLUSPSERVAGREE table should have a unique value in the PLUSPSERVAGREEID column.

Then to find the existing values in the PLUSPSERVAGREEID column of the PLUSPSERVAGREE table, run:

SELECT PLUSPSERVAGREEID from PLUSPSERVAGREE

For me, this showed values 1 through 16.

Now, looking at the sequence itself, I found that the last value assigned was 5 with this query:

SELECT LASTASSIGNEDVAL from sysibm.syssequences where seqname = 'PLUSPSERVAGREESEQ'

So to fix the problem, I altered the PLUSPSERVAGREESEQ sequence to start at 17:

ALTER SEQUENCE PLUSPSERVAGREESEQ RESTART WITH 17

After I did that, I tried again to install the Demo Content and it worked!
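The arithmetic behind that restart value is simply "highest existing ID plus one". As a minimal Python sketch (the function name is mine, and it only builds the statement text; it does not connect to DB2):

```python
def restart_statement(existing_ids, sequence="PLUSPSERVAGREESEQ"):
    """Build an ALTER SEQUENCE statement that restarts just above the highest ID in use."""
    ids = list(existing_ids)
    # With no rows at all, starting at 1 is an assumption of this sketch
    next_value = max(ids) + 1 if ids else 1
    return "ALTER SEQUENCE {} RESTART WITH {}".format(sequence, next_value)


if __name__ == "__main__":
    # IDs 1 through 16 were present in my PLUSPSERVAGREE table
    print(restart_statement(range(1, 17)))
```

You would feed it the values returned by the SELECT on PLUSPSERVAGREEID shown earlier.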

If you installed the Demo Content first

I take lots of snapshots of my VMs, so I could easily go back to a snapshot where I had already installed the Demo Content, to then try to install the Content Packs. That led me to see that the Change Management Content Pack has hardcoded values in the DATA\PLUSRESPPLAN.xml file (by downloading the ChangeMgtPack7.6.zip file and opening up the file). On the positive side, it appears that nothing else in the Content Pack actually references these hardcoded values, so we have the option of changing them as needed.

In my particular case, I found the following values in the PLUSPSERVAGREEID column of the PLUSPSERVAGREE table:

9

10

11

12

13

14

24

25

I also found that the LASTASSIGNEDVAL for the PLUSPSERVAGREESEQ sequence was 25, so that matches up with the data.

The very lucky part for me is that there are exactly 8 rows that get inserted by the PLUSRESPPLAN.xml file, and the PLUSPSERVAGREE table doesn't have any rows with values 1 through 8!

So the solution I applied was I manually edited the PLUSRESPPLAN.xml file to set the PLUSPSERVAGREEID values to 1 through 8. Then I saved the edited file back into the zip file, created a valid ContentSource.xml file to point to it (so I could install from my local copy of the Content Pack), added my new Content Source to the Content Installer, and I was able to successfully install the Change Process Content Pack!
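I picked 1 through 8 by eye, but finding unused IDs is easy to automate if your table looks different. A minimal Python sketch, with a function name of my own choosing, that returns the lowest positive IDs not already in use:

```python
def lowest_free_ids(used_ids, count):
    """Return the `count` smallest positive integers that are not in used_ids."""
    used = set(used_ids)
    free = []
    candidate = 1
    while len(free) < count:
        if candidate not in used:
            free.append(candidate)
        candidate += 1
    return free


if __name__ == "__main__":
    # The PLUSPSERVAGREEID values that were in my table after the Demo Content install
    print(lowest_free_ids([9, 10, 11, 12, 13, 14, 24, 25], 8))
```

Keep in mind that IDs chosen this way sit below the sequence's current value, so this only helps for hardcoded inserts like the ones in PLUSRESPPLAN.xml.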

However, I then found that there's also a similar problem with the Service Desk Content Pack, but the same solution can't be applied. Specifically, in the Service Desk Content Pack, the DATA\SLA.xml file uses hardcoded values for the same column, but those values are 1, 3, 4 and 5, which I just used in my workaround for the Change Management Content Pack. So to fix this correctly, I looked in the Demo Content Content Pack to find out how to reference the PLUSPSERVAGREESEQ sequence, and it's actually not too bad.

So the fix I went through was to manually modify the DATA\SLA.xml file to change every element that looked like this:

<column dataType="java.lang.Long" name="PLUSPSERVAGREEID">

<value>3</value>

</column>

to this:

<column dataType="java.lang.Long" name="PLUSPSERVAGREEID">

<columnOverride>

<sequence mode="nextval" name="PLUSPSERVAGREESEQ"/>

</columnOverride>

</column>

Then like above, I saved the edited file back into the zip file, created a valid ContentSource.xml file to point to it (so I could install from my local copy of the Content Pack), added my new Content Source to the Content Installer, and I was able to successfully install the Service Desk Content Pack!
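If the file has many of these elements, the hand edit to DATA\SLA.xml could be scripted instead. Here is a minimal Python sketch using the standard library's xml.etree.ElementTree. The function name and the wrapper element in the usage example are hypothetical, since I haven't reproduced the full layout of SLA.xml here, and ElementTree will not preserve the original whitespace or comments, so diff the result before using it.

```python
import xml.etree.ElementTree as ET


def use_sequence_for_column(xml_text, column="PLUSPSERVAGREEID", sequence="PLUSPSERVAGREESEQ"):
    """Replace hardcoded <value> children of the named column with a sequence override.

    Returns the rewritten XML text and the number of columns changed.
    """
    root = ET.fromstring(xml_text)
    changed = 0
    # Copy to a list first so the tree can be modified safely while looping
    for col in list(root.iter("column")):
        if col.get("name") != column:
            continue
        value = col.find("value")
        if value is None:
            continue  # already converted, or no hardcoded value
        col.remove(value)
        override = ET.SubElement(col, "columnOverride")
        ET.SubElement(override, "sequence", {"mode": "nextval", "name": sequence})
        changed += 1
    return ET.tostring(root, encoding="unicode"), changed


if __name__ == "__main__":
    # "row" is a made-up wrapper element for illustration only
    sample = (
        '<row><column dataType="java.lang.Long" name="PLUSPSERVAGREEID">'
        "<value>3</value></column></row>"
    )
    print(use_sequence_for_column(sample)[0])
```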

After installing, I checked the PLUSPSERVAGREE table again, and I saw that the values 26 through 29 were there, so I know my change worked.

So in my case I didn't have to change the start value for the PLUSPSERVAGREESEQ sequence, which is nice.

It was a painful afternoon, but well worth it in the end.

Wednesday, June 8, 2016

Installing ITIC and TDI on Windows Server 2012

On each file, right click and select Properties.

Then on the Compatibility tab, click the "Change settings for all users" button at the bottom.

In the "Compatibility mode" section, select "Run this program in compatibility mode for:" checkbox.

Select "Windows 7" from the drop down list.

Click OK, then OK again.

And now you're ready to install

UPDATE: You do also need to ensure that the java executable is in your path. If not, it will fail when trying to create the Java Virtual Machine.

UPDATE 2: And it MUST be the Java 1.7 executable in your path. 1.8 will fail.
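To confirm which Java is first in your path before you start, you can parse the output of java -version. This is a minimal Python sketch with function names of my own; it relies on the usual version "1.7.0_xx" banner format, and on the fact that java -version writes to stderr.

```python
import re
import subprocess


def java_major_minor(version_output):
    """Extract (major, minor) from `java -version` output, or None if not found."""
    match = re.search(r'version "(\d+)\.(\d+)', version_output)
    if not match:
        return None
    return int(match.group(1)), int(match.group(2))


def is_java_7(version_output):
    """True only for a 1.7.x version banner."""
    return java_major_minor(version_output) == (1, 7)


def java_in_path_output():
    """Run the java found in PATH and return its version banner (printed on stderr)."""
    result = subprocess.run(["java", "-version"], capture_output=True, text=True)
    return result.stderr


if __name__ == "__main__":
    print("Java 1.7 in path:", is_java_7(java_in_path_output()))
```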

Tuesday, June 7, 2016

Installing SmartCloud Control Desk 7.5.1 Demo Content on ICD 7.6

You can't do it

https://www.ibm.com/developerworks/community/wikis/home?lang=en#!/wiki/Anything%20about%20Tivoli/page/How%20to%20install%20the%20sample%20data%20during%20IBM%20Control%20Desk%207.6%20installation

These steps are supposed to get it installed after the initial install, but I tried twice and failed both times:

http://www.ibm.com/support/knowledgecenter/SSLKT6_7.6.0/com.ibm.mam.inswas.doc/mam_install/t_mam_create_maxdemo_postinstall.html

So if you want demo data, which you do in some number of test/dev environments, simply install it at initial install time. It goes very smoothly.

But you can, mainly, with a little work

Additionally, you will have other problems, such as the following error when you try to create a new WORKORDER:

And there's no easy fix. So the demo data will let you play around with a lot of functionality, but the system is pretty unusable for anything else after you install it.

Add an attribute to the TICKET table

Download the package

Edit the package

Define your local content and Install

Create an XML file called ContentSource.xml in the C:\temp directory on your Smartcloud Control Desk server system that contains the following text:

<?xml version="1.0" encoding="UTF-8"?>

<catalog infourl="" lastModified="" owner=""

xmlns:tns="http://www.ibm.com/tivoli/tpae/ContentCatalog"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation=" ContentCatalog.xsd">

<catalogItem>

<version>7.5.1</version>

<type>mriu</type>

<name>Enter the name of package</name>

<description>Enter a description of the package here</description>

<homepage/>

<licenseurl/>

<category>Describe the category of the content</category>

<url>file:////C:\temp\TestPackage.zip</url>

</catalogItem>

</catalog>

Edit the name and description and the category according to the content that you are installing. Change the file name in the URL to the name of the content pack zip file.

Save the file.

Copy the content pack zip file to the C:\temp directory on the server.

Go to the ISM Content Installer application: System Configuration>IBM Content Installer.

Click the New icon.

Enter the location of the ContentSource.xml that you created in step 1 and a description. The file name in our example is: file:////c:\temp\ContentSource.xml

Click Save.

Click the newly created content source.

Click the download link to install the content.

You've now got a good amount of demo content

Monday, June 6, 2016

IBM Control Desk for Service Providers

Create a customer named ACME CORP

The Cust/Vendor field is farther down on the page.

Now create a user that is associated with that Person.

Now create a Security Group (SP) with any permissions you want, but specify "Authorize Group for Customer on User's Person record". I only granted Read access to the Assets application. And add your user to this group.

Here I'm logged in as the user, and can only see the one asset associated with ACME CORP.

Here I'm viewing the Licenses (SP) application for Adobe Acrobat and see that a license has been allocated to ITAM1010, which is the asset associated with ACME CORP.

Friday, April 22, 2016

Configuring ITIC for use with IBM Control Desk 7.6

FSNBUILD=7510

to

FSNBUILD=7600

Without this change, it's trying to find a file named IntegrationComposer7510.jar, which doesn't exist. In 7.6, the correct file is IntegrationComposer7600.jar.
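If you want to make the FSNBUILD change with a script, here is a minimal Python sketch that rewrites the value in the text of whichever file holds that setting on your system. The function name is made up, and it only touches lines that start with FSNBUILD=.

```python
import re


def set_fsnbuild(text, build="7600"):
    """Return (new_text, count) with every FSNBUILD=... line set to the given build."""
    pattern = re.compile(r"(?m)^([ \t]*FSNBUILD[ \t]*=[ \t]*).*$")
    # A function is used for the replacement so the digits in `build`
    # are never misread as a regex group reference
    return pattern.subn(lambda m: m.group(1) + build, text)


if __name__ == "__main__":
    new_text, count = set_fsnbuild("FSNBUILD=7510\n")
    print(count, new_text, end="")
```

A count of 0 means the setting wasn't found, which is a hint that you're looking at the wrong file.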

Another thing to note is the URL of the BigFix server for use with the ITIC mapping is:

https://hostname-or-ipaddress:52311