UPDATE 6/3/2020

Another way to resolve this issue is to point to a local maximo.properties file as described here:

And put the mxe.report.birt.viewerurl property in that file.

Introduction

I recently encountered an issue in one of my ICD 7.6 installations where a global system property had an incorrect value set that I needed to change without rebuilding my MAXIMO.EAR file. This post is a description of the problem and my eventual "fix". It's just a test environment, and this is NOT a resolution that I would recommend for a production system. But I wanted to document the details to possibly help others in similar situations.

Problem

I installed ICD 7.6 and chose to use the maxdemo DB2 database script during configuration. This apparently set the mxe.report.birt.viewerurl global property to http://myhostname.domain.name/maximo/reports/, which is an invalid value. This system property should either be unset or set to http://myhostname.domain.name/maximo/report (with no trailing "s"). As a result, any attempt to click the "Run reports" action gives an HTTP 404 error.
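To make the difference between the two values concrete, here is a minimal Python sketch that checks a candidate value for this property. The function name viewer_url_ok is hypothetical, and it assumes the only acceptable values are the two described above: unset (empty), or a URL whose path is exactly /maximo/report. It only inspects the string and does not contact the server.

```python
from urllib.parse import urlsplit


def viewer_url_ok(value):
    """Return True if value is unset/empty or its URL path is exactly /maximo/report.

    Hypothetical helper: the accepted path is an assumption taken from this post.
    """
    if value is None or value.strip() == "":
        return True  # an unset property is acceptable
    return urlsplit(value.strip()).path == "/maximo/report"


bad = "http://myhostname.domain.name/maximo/reports/"
good = "http://myhostname.domain.name/maximo/report"
print(viewer_url_ok(bad), viewer_url_ok(good))
```

A check like this only tells you whether the stored value matches the expected form. It says nothing about where the value is coming from.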

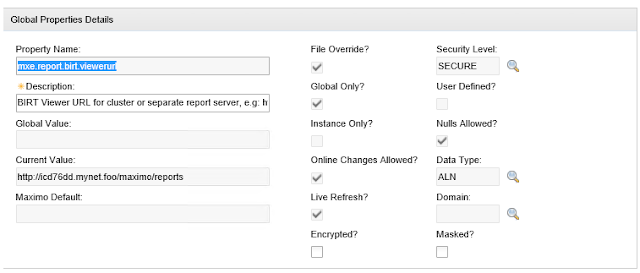

It took a while to track this down, but looking in System Configuration->Platform Configuration->System Properties finally showed me the setting for this system property:

Notice that I'm unable to modify the value AND "File Override?" is checked. So this means the value is set somewhere in the filesystem. Unfortunately, I couldn't find the value anywhere in any file on the system, so the only normal way around this is to modify maximo.properties on the Admin workstation, rebuild MAXIMO.EAR, then redeploy the EAR file. But I didn't want to do that for various reasons. Also, since "Global Only?" is set to true, I couldn't create an instance-specific property with the same name and different value.

My "solution"

I tried several different tactics, but the one that finally worked for me was to directly update the database to set "User Defined?" true for this property so I could then delete it and create an instance-specific property with the same name. The SQL command I used to make this change was:

update maxprop set userdefined=1 where propname = 'mxe.report.birt.viewerurl'

After running the above SQL command from a DB2 command prompt, I could then create an instance-specific property with the same name but with the correct value. Once I did that, I was able to successfully run all* BIRT reports.
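Before running an update like the one above against a real database, it can help to rehearse it on a throwaway table and confirm that exactly one row changes. The following is a minimal sketch using Python's built-in sqlite3 module, not DB2. The two-column maxprop table here is a hypothetical, cut-down stand-in (the real table has more columns), and the second property name and the mark_user_defined helper are made up for illustration.

```python
import sqlite3

PROP = "mxe.report.birt.viewerurl"


def mark_user_defined(conn, propname):
    """Set userdefined=1 for a single property; return the number of rows changed."""
    cur = conn.execute(
        "update maxprop set userdefined = 1 where propname = ?", (propname,)
    )
    conn.commit()
    return cur.rowcount


# In-memory stand-in for maxprop (hypothetical schema, two columns only).
conn = sqlite3.connect(":memory:")
conn.execute("create table maxprop (propname text primary key, userdefined integer)")
conn.executemany(
    "insert into maxprop values (?, ?)",
    [(PROP, 0), ("another.example.property", 0)],  # second row is a made-up name
)

changed = mark_user_defined(conn, PROP)
rows = dict(conn.execute("select propname, userdefined from maxprop"))
print(changed, rows)
```

The point of the rehearsal is the row count: the where clause should touch only the one property, and every other row should keep its original userdefined value.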

All* reports?

Actually, the demo database script has at least one problem. Specifically, the CI named RBA_SERVER has a problem that causes the "CI List" report to fail. To get around this issue, you need to first find and delete the WORKORDER that references the RBA_SERVER CI, and then you can delete the RBA_SERVER CI. Once you delete that CI, you'll be able to successfully run the "CI List" report.