Background

This is a case where we helped a customer save quite a bit of money by using software they already owned rather than paying a large upcharge for additional licenses that they didn't need.

Suppose that, for any number of good reasons, your use case only calls for the free version of Elastic, but you also want to integrate alerts with your ticketing system. The challenge is that the free version of Kibana does not include a Webhook connector for alerts. The free license includes the Server log connector, whereas the Webhook connector (and others) are available only with the paid licenses.

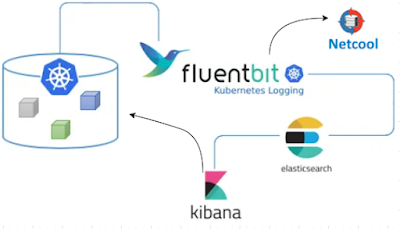

I have a customer in exactly this situation. An application they purchased is bundled in an appliance running a packaged Kubernetes distribution, and the application includes Fluent Bit for log collection into Elasticsearch. The initial challenge was to send alerts to their on-prem Netcool environment when certain log messages were written. We helped them meet this challenge by using Fluent Bit's HTTP output as a webhook to send the appropriate messages to the Netcool message bus probe, which then creates an incident in their ticketing system for each of these alerts.

Their next requirement was to create incidents only when some aggregation of log messages met a condition. Specifically, they obtained several Elasticsearch queries from the vendor that should be used to generate incidents. This is straightforward with one of the paid Elastic licenses: you write a rule with the Elasticsearch query as the condition and use the built-in Webhook connector to define an action that sends a message. With the free license of Kibana, that connector isn't available.

My Solution

The trick in this case is to use the Server log connector in Kibana to write a specifically formatted message to the log whenever the Elasticsearch query condition is met. The message can look something like this:

CREATE_INCIDENT Vendor Query X has breached the prescribed threshold. Take action Y to correct.

This message is written to the log of the Kibana pod, which Fluent Bit is already tailing. So all we needed was a FILTER in Fluent Bit that matches this log message, so that the matching records can be routed to the message bus probe.
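
To make the idea concrete, here is a minimal sketch of what such a Fluent Bit configuration could look like in classic-mode syntax. It is not the customer's actual configuration, and it rests on several assumptions: that container logs are tagged `kube.*`, that the raw log line lives in the `log` key (it may be `message` if the Kubernetes filter merges Kibana's JSON logs), and that the probe listens at the hypothetical host, port, and URI shown. The sketch uses the `rewrite_tag` filter to give matching records a new tag, and an `http` output that only picks up that tag.

```
# Re-tag any record whose log line contains the CREATE_INCIDENT marker.
# Assumption: records are tagged kube.* and the line is stored under "log".
[FILTER]
    Name          rewrite_tag
    Match         kube.*
    # Rule format: $KEY  REGEX  NEW_TAG  KEEP
    # KEEP=true leaves the original record in place so it still reaches Elasticsearch.
    Rule          $log CREATE_INCIDENT incident.kibana true
    Emitter_Name  incident_emitter

# Send only the re-tagged records to the message bus probe over HTTP.
# Host, Port, and URI are placeholders, not real endpoints.
[OUTPUT]
    Name    http
    Match   incident.*
    Host    netcool-probe.example.internal
    Port    8080
    URI     /probe/webhook
    Format  json
```

The new tag deliberately does not match `kube.*`, so re-emitted records are not picked up by the same filter again. If the existing Elasticsearch output uses `Match *`, the re-tagged copy would also be indexed, so narrowing that output to `kube.*` may be worth considering.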